Leaderboard

Popular Content

Showing content with the highest reputation since 04/16/23 in Posts

-

Yes, native support for Proxmox VMs is under investigation. However, you can already use agent-based backup for Proxmox. This should allow you to back up and recover Proxmox data the way you would back up your Windows or Linux machines. We’ll share the workflow here soon. Stay tuned!

6 points

-

Darn, was hoping to test it out already. The native backups in PVE are okay but lacking some key features.

5 points

-

Does anyone know if NAKIVO is planning to support Proxmox backup? I'm looking for an alternative to the built-in Proxmox Backup Server.

4 points

-

Dear @Argon, Thank you for your honest feedback. This helps us understand what our users need and how to improve our product moving forward. We have tried to be as clear as possible that this is only an agent-based approach to backing up Proxmox VE, and wanted to inform users who may not be aware of this approach in NAKIVO Backup & Replication. As for the native Backup Server Tool, it may have some advantages but it lacks several important capabilities such as multiple backup targets (for example, cloud) or recovery options (for example, granular recovery of app objects). We are continuously working on improving our software solution, and we are investigating native Proxmox support. Thank you once again for your input. Best regards

4 points

-

We’re excited to announce that NAKIVO has expanded the range of supported platforms to include Proxmox VE:

4 points

-

I asked the NAKIVO sales team about Proxmox support recently. They said native support is on their roadmap but no ETA yet.

4 points

-

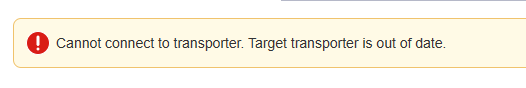

Hi, I have a problem that appears to be a version incompatibility. I am running the most recent version of NAKIVO, 10.11, and I have two NAS devices, one with Transporter version 10.8 and another with version 10.5. When I try to add them as nodes, I get the error shown in the screenshot. My solution would be to downgrade to 10.8 or similar, but I can't find the resources on the official website. Could you help me get past versions of NAKIVO? If you have any other solution ideas or suggestions, I'm open to trying them.

2 points

-

Hello @Ana Jaas, thanks for reaching out. To be able to provide the steps to take, we need more information about your NAS devices. Could you please provide us with the specifications of your NAS devices? We'll need details such as the model, operating system version, and CPU version. This information will enable us to guide you through updating the Transporter to match the version of the Director. Alternatively, you can navigate to Expert Mode: https://helpcenter.nakivo.com/User-Guide/Content/Settings/Expert-Mode.htm?Highlight=expert mode and enable system.transporter.allow.old to address the compatibility issue. If you find these steps challenging or would prefer direct assistance, we're more than willing to schedule a remote session to help you navigate through the process: support@nakivo.com. Looking forward to your response.

2 points
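The mismatch discussed in this thread can be spotted up front by comparing version numbers. Below is a minimal Python sketch, assuming (as the reply suggests) that each Transporter should match the Director version. The helper names and node labels are hypothetical, and comparing only major.minor is an assumption for illustration, not NAKIVO's own compatibility logic.

```python
def parse_version(text):
    """Turn a dotted version such as '10.11' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def outdated_transporters(director, transporters):
    """Return the names of Transporters older than the Director.

    Only major.minor is compared; that is an assumption made for
    illustration, not a documented NAKIVO rule.
    """
    wanted = parse_version(director)[:2]
    return sorted(
        name
        for name, version in transporters.items()
        if parse_version(version)[:2] < wanted
    )


# Versions taken from the post above; the node names are made up.
nodes = {"nas-a": "10.8", "nas-b": "10.5"}
print(outdated_transporters("10.11", nodes))
```

Parsing into integer tuples matters here: compared as plain strings, "10.8" would sort after "10.11" and the older Transporter would look newer.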

-

I think the developer made a fundamental mistake here. The repository should only be responsible for the storage type (disk or tape), where the data is stored (local, NAS or cloud), and how the data is stored (compression and encryption). The backup job should determine how the data is structured. The "Forever incremental" and "Incremental with full" options should be within the job schedule section, and the retention policy should determine how long the data is kept. Deciding whether the data is stored as "Forever incremental" or "Incremental with full" should be a function of the backup job itself, not of the repository.

2 points

-

Hi, the tape volume doesn't move back to its original slot when it is removed from the drive.

2 points

-


Thanks, I really appreciate your contribution to this problem, which I have now resolved. Again, thank you.

1 point

-


I sincerely hope that you can fully support Proxmox. I would prefer to use a Nakivo solution (which has satisfied us for a long time on ESX) rather than switching to a competitor.

1 point

-


Hi, I'm new to this forum so excuse me if this topic has already been discussed (I didn't find anything though). My company is hosting and helping multiple customers, both in our hosting and on-prem environments. I've worked with Nakivo before, but only on on-prem installations with one setup per customer (local vCenter and ESXi access). What I would now like to do is have one central Director in which we manage multiple customers. From what I can see in the docs, the setup should then consist of a Director with Transporter and repos centrally, a Transporter at the customer site with Direct Connect enabled, and agents on physical machines. But the main problem is that we need to open ports in the customer firewall to the Transporter server. And if we have a customer that doesn't allow access to vCenter and only allows agent-based backups, we still need to add a Transporter and open the firewall to that. Is that correct? Is there no alternative that lets agents talk to our Transporter and/or Director centrally without opening any inbound firewall ports on the customer side? Hope my question makes sense.

1 point

-


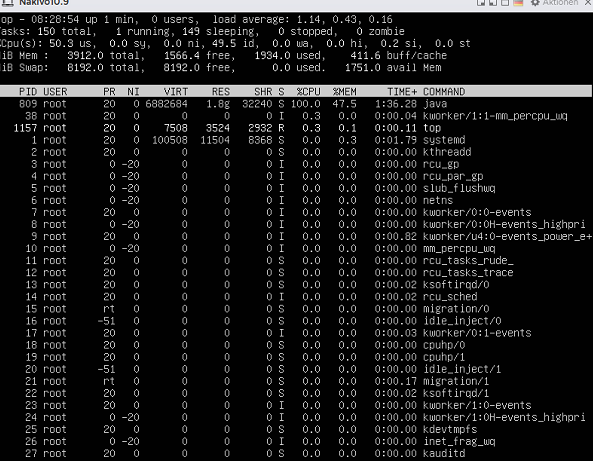

Hi there, I'm optimizing my Nakivo configuration right now. I wonder how to determine the best max load for a Transporter. I guess the bottleneck will not be the network, because network speed is usually under 5 Gb and the max speed I saw is 16 Gb (once). CPU usage is around 25%. Free memory is over 2 GB. I/O doesn't seem very high, but activity is low for now. So, using a dedicated Transporter with 4 vCPUs and 4 GB of RAM, what is the optimum load? Regards

1 point

-

+1 for enabling Nakivo to work with XCP-NG/XOA. I really want to get off the vSphere platform now that Broadcom keep tightening the screws. By the looks of things, there are a lot of people in the same boat (life raft!). This is a huge business opportunity for Nakivo to get the early mover advantage on XCP support, and would help Nakivo keep their existing user base of those users leaving, or looking to leave, VMware.

1 point

-

It looks like it is not possible to store M365 backups in a cloud repository such as S3 or Wasabi. Or did I miss something? The idea of storing the M365 data on-premises is different from some competitors and a nice option. But the time has come where hybrid worlds and cloud-to-cloud "backups" make sense. Is a cloud repository for M365 in the pipeline? I don't think the workaround of storing M365 data in a local Windows repo and making an additional "backup" of that repo to the cloud is a good idea...

1 point

-


Hi, where can I download NAKIVO 10.11.0 for Synology DSM 7 and higher? Regards, Oliver

1 point

-

I would like to ask a few questions regarding the site recovery VMware VM script:

- Where should this script be stored: on the Director or inside the VM?
- Which username/password should be used: VMware or the guest?
- Is it necessary to have any open ports between the Director and the VM, or is communication between the Director and the host sufficient?

Thank you

1 point

-

Thanks for your reply. I am using this in Web UI. Will wait for the same feature in API.

1 point

-

The current software doesn't have an option for us to control the number of synthetic full backups. The best option I can find is to create a synthetic full every month. Most of our clients are required to store backup data for at least one year. Some clients are even required to keep backups for 3-10 years. With the current software options, one-year retention will create 12 synthetic full backups. Even though a synthetic full doesn't actually read from the protected server (which saves backup time), it still constructs a full backup from the previous incrementals and eats up storage space. If one full backup takes 1TB, one-year retention means we will need at least 12TB to store the backups. Can Nakivo add a feature to set the maximum number of synthetic full backups so we can control how many full backups are in the entire backup storage? We have clients with large file servers where a full backup is more than 3TB.

1 point
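The arithmetic in this post can be written down as a short Python sketch. It counts only the retained full backups and ignores incrementals, compression and deduplication, so it is a rough figure that mirrors the post's own estimate rather than a sizing tool. The function name and the cap of 4 fulls are hypothetical.

```python
def synthetic_full_storage_tb(full_size_tb, fulls_retained):
    """Space taken by the retained full backups alone, in TB.

    Incrementals, compression and deduplication are ignored; this only
    reproduces the back-of-the-envelope estimate from the post.
    """
    return full_size_tb * fulls_retained


# Figures from the post: monthly synthetic fulls kept for one year.
print(synthetic_full_storage_tb(1, 12))  # 1 TB fulls
print(synthetic_full_storage_tb(3, 12))  # 3 TB file server

# A hypothetical cap of 4 retained fulls, the kind of limit being requested.
print(synthetic_full_storage_tb(3, 4))
```

The last line shows why the cap matters: for the 3TB file server, limiting retained fulls to 4 brings the figure back down to what the 1TB case needs for a whole year.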

-

We're using Nakivo 10.8.0. It's provided as a Service by our hosting provider - they maintain the console, we have a dedicated transporter and we collocate a Synology NAS for storage of our backups. I have a file server backup job that required about 3.75TB for a full backup, and generates incrementals of a couple hundred gig/night. I've got the backup job set to run a full every weekend and then incrementals during the week. I have enough space on the NAS to keep about a month's worth of nightly recovery points. I have retention settings currently defined on the job that will eventually exceed my available space, but I haven't hit the wall yet. I'd like to start using a backup copy job to S3 to stash extra copies of backups and also build a deeper archive of recovery points. I'd like to keep about 2 weeks worth of nightly recovery points in S3, then 2 more weeks of weekly recovery points, then 11 more monthly recovery points, and finally a couple yearly. I built a backup copy job with the retention settings specified, and then ran it. My data copy to S3 ran at a reasonable fraction of the available bandwidth from my provider. However, once the first backup was copied up to S3 in 700+ 5GB chunks, it took an additional 60+ HOURS to "move" the chunks from the transit folder to the folder that contained that first backup. All told, it was 100 hours of "work" for the transporter, and only the first 35ish were spent actually moving data over the internet from our datacenter to S3. Either I've failed spectacularly at setting up the right job settings (a possibility I'm fully prepared to accept - I just want to know the RIGHT way), or the Nakivo software uses a non-optimal set of API calls when copying to S3, or S3 is just slow. I've read enough case studies and whatnot to believe that S3 can move hundreds of TB/hour if you ask it correctly, so 60 hours to move <5 TB of data seems like something is wrong. 
So, my questions are these - what's the best way to set my retention settings on both my backup job and my backup copy jobs so that I have what I want to keep, and how do I optimize the settings on the backup copy to minimize the amount of time moving data around? The bandwidth from my datacenter to S3 is what it is and I know that a full backup is going to take a while to push up to Uncle Jeff, but once the pieces are in S3 what can be done to make the "internal to AWS" stuff go faster?

1 point
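To put the timings from this post side by side, here is a small Python sketch. It uses the rounded figures quoted above ("700+" chunks of 5GB, "35ish" hours of upload, "60+" hours of moving), so the results are approximations. The function name is hypothetical and nothing here reflects how NAKIVO or S3 actually performs the move.

```python
def throughput_gb_per_hour(chunks, chunk_gb, hours):
    """Average rate in GB/hour for handling `chunks` pieces of `chunk_gb` each."""
    return chunks * chunk_gb / hours


chunks, chunk_gb = 700, 5          # "700+ 5GB chunks"
upload_hours, move_hours = 35, 60  # "35ish" and "60+" hours

total_gb = chunks * chunk_gb
upload_rate = throughput_gb_per_hour(chunks, chunk_gb, upload_hours)
move_rate = throughput_gb_per_hour(chunks, chunk_gb, move_hours)

print(f"data copied: {total_gb} GB")
print(f"upload over the internet: {upload_rate:.0f} GB/hour")
print(f"move inside S3: {move_rate:.0f} GB/hour")
print(f"seconds per chunk during the move: {move_hours * 3600 / chunks:.0f}")
```

On these numbers the in-cloud "move" ran at a lower average rate than the upload over the internet link, which is the anomaly the post is asking about.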

-

So it turns out that if malware is found in a user's OneDrive you get an alert. The issue is that there's no information included about which file or folder is affected or what type of malware was detected. Has anyone dealt with this? Does Nakivo exclude the affected files from the backup? How can one flag things if it's a false positive? I'm not a Windows person so any pointers on how to deal with malware on a user's OneDrive would be most welcome too.

1 point

-

I have one server where NAKIVO is installed, and I need to back this server up because it is the server where the other services run. How can I add this server to the Inventory?

1 point

-

Hi, where can I download NAKIVO 10.9.0 for Synology DSM 7? Regards, Oliver

1 point

-

Hi, this link doesn't work. Can you provide the correct link? I need this exact version for manual install: 1.0.9.0.75563. The latest version in Synology Package Center is 10.8.0.73174. Thank you.

1 point

-

Hi everyone, each week I make one primary full backup and six incrementals (one per day) to a NAS during the night. I want to make a full backup copy from the NAS to tape every day, during the daytime when the primary backup is not running. How can I achieve this behaviour? Is it possible without making a full backup to the NAS every time?

1 point

-

Nakivo B&R has the great option to back up to WASABI cloud storage. However, these backups are unencrypted. Is it possible to add an option to encrypt those backups?

1 point

-

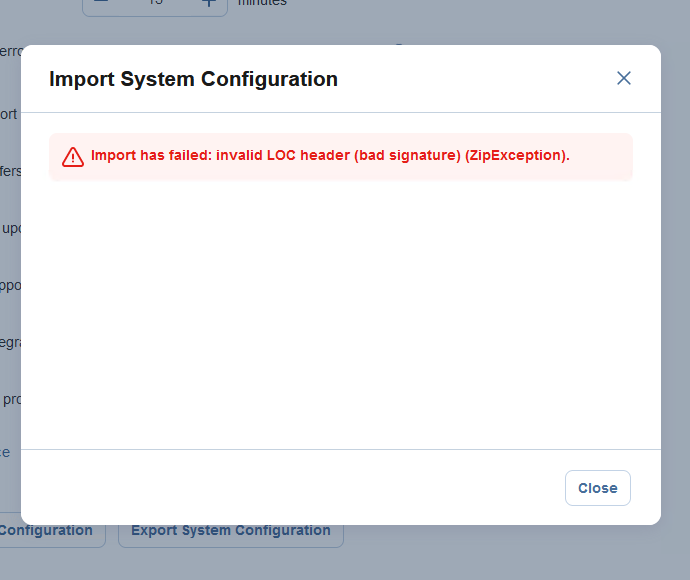

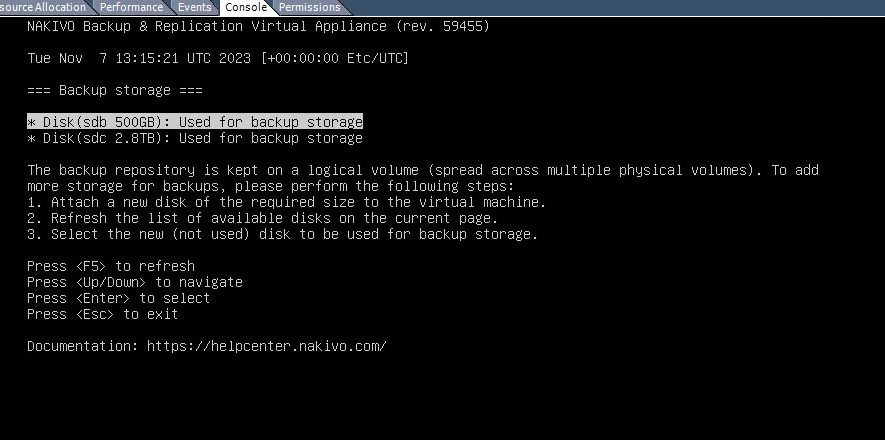

Good morning, I'm sure that I've made an error but I hope that someone can help me in this situation. Scenario: NAKIVO Backup & Replication Virtual Appliance, current version 10.7.2.69768, with 2 disks for backup storage (500 GB default + 1000 GB added some time ago). I know the procedure to add more storage for backups (1. attach a new disk... 2. refresh the list of available disks, 3. select the new disk) but I've made a mistake: I increased the size of the 1000 GB disk in the vSphere Client (now 2,800 GB) and restarted the application. When I go to "Onboard repository storage" I see a disk size of 2.8 TB, but the Onboard Repository in the web interface is not updated. Is there a way to solve this situation? Thanks, Elia

1 point

-

Hello, we are evaluating a switch from our existing M365 backup solution to Nakivo. Are there any plans to support the backup of Exchange Online mailboxes and Modern Authentication? From the release notes of 10.9 I saw that the backup of Exchange Online mailboxes is not supported yet. In the user guide I found that the backup user needs to be excluded from conditional access. I know that in the past there were several limitations on Microsoft's side, with APIs not supporting modern authentication, but as far as I know, those have been fixed. Is this perhaps already implemented for the upcoming 10.10, or are there any other plans? Regards, Thomas Kriener

1 point

-

I currently have a Nakivo installation backing up to Dell Data Domains but am transitioning over to Synology FS6400s. The transporters that control my backup repositories have the 'ddboostfs' plugin installed and mount the appropriate storage locations on the local Data Domain. I recently got my new Synology FS6400s online in my lab. After some web searching, I was able to find out how to manually update the version of the Nakivo Transporter service to 10.9; only 10.8 is available in the public channel and it's incompatible with a 10.9 director. The fact that I had to do this already makes me wary of running the transporter service on the array itself, even though we specifically purchased the arrays with 128GB RAM to ensure adequate resources. Anyway, I created a forever incremental repo on each array and testing was successful, though my Data Domains use incremental with full backup and rely on DDOS for dedupe.

However, I am starting to question the way I was going to use my Synology arrays to replace my Data Domains. I wasn't aware that the Synology arrays support data deduplication on the array itself, which is why I loaded the Nakivo Transporter service in order to reduce my storage footprint. Using Nakivo's deduplication requires a period of space reclamation that is historically dangerous should the process be interrupted. Given what I now know, I'm wondering how others have used Synology arrays to store their backup data. I myself question the following:

1. Should I still use the Nakivo Transporter service on the array, configure my repo(s) for incremental with full backup, and turn on Synology's data deduplication process for the volume hosting my backups?
2. Should I consider forgoing the Transporter service on the array, configure NFS mount points to my virtual transporter appliances, and turn on Synology's data deduplication process for the volume hosting my backups?
3. I created a folder (/volume1/nakivo) without using the Shared Folder wizard, and that is where my repo stores its data. I've seen prompts indicating that information stored outside of "Shared Folders" can be deleted by DSM updates. Will future patches potentially cause my Nakivo data to be removed since I created the folder myself? I have no reason to share out the base /volume1/nakivo folder if the Transporter service is local to my array.

Other advice on using Synology and Nakivo together is much appreciated.

P.S. After VM backups are taken, we perform backup copies across data center boundaries to ensure our backup data is available at an off-site location for DR/BCM purposes. By placing the transporter service on the array itself, we figure this replication process will be mainly resource driven at the array level (with network involvement to move the data), which will lighten our virtual transporter appliance footprint. Currently our replication process must send data from the local DD to a local transporter VM that then sends the data across an inter-DC network link to a remote transporter VM that finally stores the data on the remote DD. The replication process uses a good chunk of virtual cluster resources that could be better directed elsewhere.

1 point

-

Hello, thanks for your explanation. I'm not able to provide any support bundle, because we are not yet using the M365 backup from Nakivo. I understand from the following statement in the "Microsoft 365" section of the 10.9 release notes that the backup of "online archive mailboxes" is not supported in 10.9: "Backup and recovery of guest mailboxes, public folder mailboxes, online archive mailboxes, Group calendars, and Group planners, distribution groups, mail-enabled groups, security groups, resource mailboxes, read-only calendars, calendars added from Directory, event messages (that is, "#microsoft.graph.eventMessageRequest", "#microsoft.graph.eventMessage"), and outbox folders are not supported." This is where I would like to know if this is on the roadmap or maybe already in the 10.10 version. Thanks, Thomas Kriener

1 point

-

Good morning, I have a problem: the jobs which have expired are still on the physical disk. Expired jobs are not deleted and my disk space is full. Thank you for your help.

1 point

-

Hello, I have a Synology DS1618+ with the latest DSM (DSM 7.2-64570 Update 3). I've tried the above procedure, but the update doesn't work and the virtual appliance can't connect to the Transporter because it keeps reporting that the Transporter is out of date. When will the update package be available, either for automatic or manual update? Can you help me, please? Thanks, Dalibor Barak

1 point

-

I am getting the below error message when I try to add some physical machines from the manager. Any ideas? An internal error has occurred. Reason: 1053 (SmbException).

1 point

-

I did verify SMB 2 was running and the C$ admin and IPC are showing as shared.

1 point

-

Ok, thank you for the information. That's disappointing, as now I need to find a download for the Windows version of NAKIVO like https://d96i82q710b04.cloudfront.net/res/product/NAKIVO Backup_Replication v10.9.0.76010 Updater.exe, but the one that matches the transporter version available on Synology. Alternatively, do you have a download link like the one above but for just the Synology Transporter version, so I can install it via SSH? Specifically, it's transporter version 10.8.0.n72947. Thanks in advance

1 point

-

Can we run the 10.9 installation on the Synology using the 'Manual Install' in the Package Centre instead of using SSH?

1 point

-

@Giovanni Panozzo On behalf of the community, I want to extend my gratitude to you for your valuable contributions and for making our Forum a better place with your insightful posts. We genuinely appreciate your active involvement and look forward to more insightful discussions and solutions from you in the future.

1 point

-

I had the same problem: when mounting an NFS share from the Nakivo GUI, only the NakivoBackup directory was created, and then the GUI mount procedure failed with the same error. I solved it by removing the maproot/root_squash options on the NFS server. In my case it was FreeBSD 13 and /etc/exports. The initial /etc/exports entry, which prevented Nakivo from setting permissions on files, was `/vol1/nakivo1 -maproot=nakivouser -network=192.168.98.16/28`. I changed it to the following working one: `/vol1/nakivo1 -maproot=root -network=192.168.98.16/28`

1 point

-

We use Nakivo v.10.8. We have a Win2019 virtual machine (Windows File Server 2019) installed on VMware ESXi 7. On Win2019 there is an SMB resource larger than 400 GB. Nakivo makes backups according to the schedule, and we normally restore files if necessary. Questions: 1 - will Nakivo back up this VM properly if we enable data deduplication, which is built into the Win2019 OS? 2 - will Nakivo back up this VM normally if we enable Win2019 folder compression (directory-properties-advanced-compress contents to save disk space)?

1 point

-


Starting on Tuesday 5-30-23, I started getting emails from Nakivo saying "NAKIVO Backup & Replication v10.9 is out". There is no mention of it on the Nakivo download site. I tried reaching out to support but they asked me to send in a support bundle (which obviously makes no sense). Any thoughts from Nakivo?

1 point

-

Hey everyone! I've been using Microsoft 365 for my business, and I want to make sure I have proper backups in place to protect my data. I recently came across the NAKIVO blog, and I was wondering if anyone here could help me understand how to set up backups in Microsoft 365. Any guidance would be appreciated!

1 point
