Leaderboard

Popular Content

Showing content with the highest reputation since 04/25/23 in Posts

-

Yes, native support for Proxmox VMs is under investigation. However, you can already use agent-based backup for Proxmox. This should allow you to back up and recover Proxmox data the way you would back up your Windows or Linux machines. We’ll share the workflow here soon. Stay tuned!6 points

-

Darn, was hoping to test it out already. The native backups in PVE are okay but lacking some key features. 5 points

-

Does anyone know if NAKIVO is planning to support Proxmox backup? I'm looking for an alternative to the built-in Proxmox Backup Server. 4 points

-

Dear @Argon, Thank you for your honest feedback. This helps us understand what our users need and how to improve our product moving forward. We have tried to be as clear as possible that this is only an agent-based approach to backing up Proxmox VE, and wanted to inform users who may not be aware of this approach in NAKIVO Backup & Replication. As for the native Backup Server Tool, it may have some advantages but it lacks several important capabilities such as multiple backup targets (for example, cloud) or recovery options (for example, granular recovery of app objects). We are continuously working on improving our software solution, and we are investigating native Proxmox support. Thank you once again for your input. Best regards4 points

-

We’re excited to announce that NAKIVO has expanded the range of supported platforms to include Proxmox VE:4 points

-

I asked the NAKIVO sales team about Proxmox support recently. They said native support is on their roadmap but no ETA yet.4 points

-

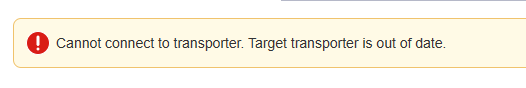

Hi, I have a problem that appears to be a version incompatibility. I am running the most recent version of NAKIVO, 10.11, and I have two NAS devices, one with Transporter version 10.8 and the other with version 10.5. When I try to add them as nodes, I get the error shown in the screenshot. My solution would be to downgrade to 10.8 or similar, but I can't find the installers on the official website. Could you help me get past versions of NAKIVO? If you have any other solution ideas or suggestions, I'm open to trying them. 2 points

-

Hello @Ana Jaas, thanks for reaching out. To be able to provide the steps to take, we need more information about your NAS devices. Could you please provide us with the specifications of your NAS devices? We'll need details such as the model, operating system version, and CPU version. This information will enable us to guide you through updating the Transporter to match the version of the Director. Alternatively, you can navigate to Expert Mode: https://helpcenter.nakivo.com/User-Guide/Content/Settings/Expert-Mode.htm?Highlight=expert mode and enable system.transporter.allow.old to address the compatibility issue. If you find these steps challenging or would prefer direct assistance, we're more than willing to schedule a remote session to help you navigate through the process: support@nakivo.com. Looking forward to your response.2 points

-

I think the developer made a fundamental mistake here. The repository should be responsible only for the storage type (disk or tape), where the data is stored (local, NAS, or cloud), and how the data is stored (compression and encryption). The backup job should determine how the data is structured. The "Forever incremental" and "Incremental with full" options should be in the job schedule section, and the retention policy should determine how long the data is kept. Choosing between "Forever incremental" and "Incremental with full" should be a function of the backup job itself, not of the repository. 2 points

-

Hi, the tape volume doesn't move back to its original slot when it is removed from the drive. 2 points

-

I'm testing O365 backup, and the first backup is endless. Many mailboxes failed, and I see no data traffic... so I have to cancel the job and start it again. It took me more than 15 days to backup 20 mailboxes, and still I am not finished. Does anyone have experience with this?1 point

-

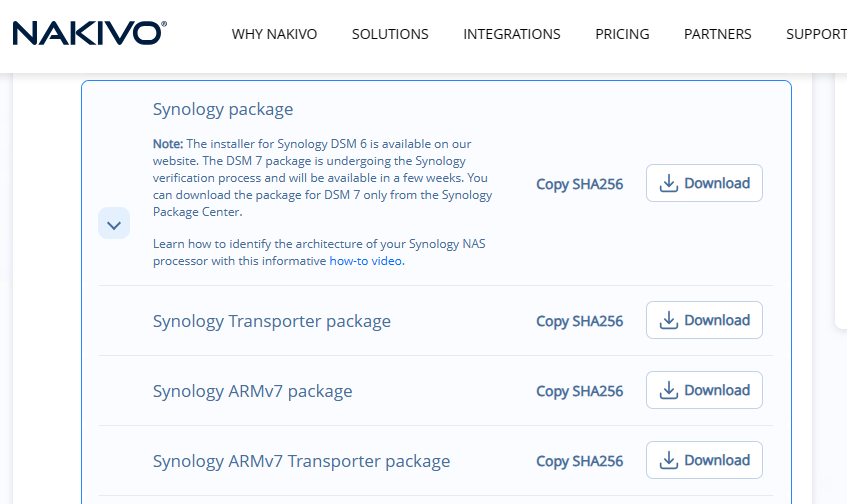

Hello, thanks for answering. I was able to upload a Transporter version to my QNAP device; it worked and is currently working. I tried to do the same with the Synology Transporter: I downloaded the updater and uploaded it for manual installation, but the following message appeared: "This package requires DSM 7.0-40000 or later." My Synology device has DSM 7.2.1 Update 4. I think this error should not appear, since my DSM version is within the range stated in the message. The updaters I downloaded are from the official NAKIVO website. I tried two of them and got the same message with both: the Synology Transporter package and the Synology ARMv7 Transporter package. Please help me :( 1 point

-

Hi guys, I'm having trouble exporting settings from Nakivo 10.2 and importing them into version 10.11. I would like to reinstall version 10.2 to perform this action, but I can't find any repository of old versions. Is there any way to download previous versions of Nakivo? 1 point

-

I am encountering a significant issue with a "Clone Backup to Backblaze" job that I've set up. The task is designed to transfer all my backups, totaling 1.4 TB, to Backblaze using a 275mbit/s internet connection. Based on my calculations, I anticipated the job would complete within 13-14 hours. However, after 43 hours and 30 minutes, the progress is only at 88.5%. Initially, the transfer rate peaks between 200-300mbit/s, which aligns with my expectations. But, a few hours into the job, the speed dramatically drops to as low as 0.01 mbit/s and stays minimal for extended periods, vastly underutilizing my available bandwidth as confirmed by speedtest.net results. Additionally, there's a scheduling conflict that exacerbates the situation. While the "Clone Backup to Backblaze" job is ongoing, the original backup process starts, writing data to the same source targeted by the clone job. I've tried to mitigate this by scheduling the clone job to run over the weekend, ensuring it has ample time to complete before the original backup resumes on Monday. Despite these adjustments, the issue persists. Could you please provide guidance or solutions to address the following concerns? The drastic fluctuations in data transfer speed, particularly the periods of significantly reduced speed. Strategies to prevent scheduling conflicts between the clone and original backup jobs, ensuring both can complete without interference. Thank you for your assistance.1 point
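As a rough sanity check on the figures in the post above, the ideal transfer time and the average rate actually achieved can be worked out directly. This is only a sketch: it assumes decimal units (1 TB = 10^12 bytes, 1 Mbit = 10^6 bits) and ignores protocol overhead, and the function names are illustrative rather than part of any NAKIVO or Backblaze tooling.

```python
def ideal_transfer_hours(size_bytes: float, link_mbit_s: float) -> float:
    """Hours needed to move size_bytes over a link running at full rate."""
    bits = size_bytes * 8
    seconds = bits / (link_mbit_s * 1_000_000)
    return seconds / 3600


def average_rate_mbit_s(size_bytes: float, fraction_done: float,
                        elapsed_hours: float) -> float:
    """Average throughput implied by the progress made so far."""
    bits_sent = size_bytes * fraction_done * 8
    return bits_sent / (elapsed_hours * 3600) / 1_000_000


TOTAL_BYTES = 1.4e12  # assumption: the 1.4 TB in the post is decimal terabytes

if __name__ == "__main__":
    # Figures taken from the post: 275 Mbit/s link, 88.5% after 43.5 hours.
    print(f"ideal: {ideal_transfer_hours(TOTAL_BYTES, 275):.1f} h")
    print(f"average so far: {average_rate_mbit_s(TOTAL_BYTES, 0.885, 43.5):.1f} Mbit/s")
```

Under these assumptions the ideal time is about 11.3 hours, so the 13 to 14 hour estimate already leaves room for overhead, while the observed progress corresponds to an average of roughly 63 Mbit/s.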

-

Live Webinar: The Ultimate Guide to VM Backup and Recovery with NAKIVO

Special Offer for Attendees*

Attend the webinar and get a chance to win:
- $50 Amazon eGift Card in one of three lucky draws during the webinar.
- $25 Amazon eGift Card for completing a quick post-webinar survey.

Protecting data in virtual environments requires a thorough understanding of potential threats and vulnerabilities and a dedicated data protection solution for virtualized workloads. Register for our upcoming webinar, where we discuss the threat landscape and best practices you need to protect your VMs. Discover how NAKIVO backup for virtualization platforms can provide the optimal solution for your data protection needs.

What it covers:
- Overview: Potential threats to virtual environments.
- Tools: NAKIVO backup for virtualization platforms.
- Tips: Best practices for data protection in virtual environments.
- Real-life Scenarios: Case studies of customers achieving their VM data protection goals.
- Technical Demo: Showcasing NAKIVO Backup & Replication capabilities for virtual workload protection.
- Q&A session: Insights from experts in virtual environment protection.

When to attend: Tuesday, March 5, 2024
- Americas: 11 AM – 12 PM EST
- EMEA: 5 PM – 6 PM CET

*Terms and conditions apply

1 point

-

Hello everyone. Like many others, we are currently looking for alternatives to VMware. Our current favorite, and that of many others we have seen, is XCP-NG in combination with XOA. Is there any support planned from Nakivo? Kind regards, Alex 1 point

-

Following up on your query, we recommend that you set up NAKIVO Backup & Replication in multitenant mode and create remote tenants for managing multiple customers through one central Director. Here's a quick guide:
- To add customers as remote tenants, you should install an independent instance of NAKIVO Backup & Replication with its own license at each remote site.
- Ensure the cloud-based multitenant NAKIVO Backup & Replication is accessible via a public IP and two ports for remote access.
- Manage all tenants from the central multitenant installation. Each remote site remains independent, ensuring jobs continue even with connectivity issues.
- No need to open any ports on the customer's side.
- This setup addresses your concerns about firewall configurations while offering centralized management.

Please don't hesitate to contact our engineers if you need a demo or assistance.

1 point

-

+1 for enabling Nakivo to work with XCP-NG/XOA. I really want to get off the vSphere platform now that Broadcom keep tightening the screws. By the looks of things, there are a lot of people in the same boat (life raft!). This is a huge business opportunity for Nakivo to get the early mover advantage on XCP support, and would help Nakivo keep their existing user base of those users leaving, or looking to leave, VMware.1 point

-

Hi, I have searched the API documentation for a way to clone a job and could not find it. Can anyone share code to clone a job using the API? 1 point

-

We have an issue where our transporters are not passing data at the expected 1GB rate. I have reached out to Nakivo with no response yet but wasn't sure if there was some sort of software hard limit? I have a copy job that runs at 1GB speed but anything using vmware transporter is bottoming out at around 450mb.1 point

-

Hi, I'm currently backing up Exchange Online through Nakivo, and I also want to keep a copy of these backups offsite for redundancy. I can't find an easy way of doing this without extra licenses. The only method I can use reliably is creating another backup job with a different Transporter at my offsite location and running the backup again. While this works well, I'm duplicating my license costs. Is there a better way of doing this, please? 1 point

-

Is there any way of having this detail added to the messages in the Director? I'd hate to leave debugging turned on permanently to have visibility of this.1 point

-

I think Nakivo should change the way the software gathers data from the server. If the software allows you to exclude files from the file system, that means it is looking at the data changes in each file. This is the slowest method of backing up data, because the backup process needs to walk through every file in the file system to determine the changes. I think that is the main reason the physical server backup process takes a very long time even if only a few files have changed: it spends most of the time walking files with no changes. The best way to back up the data on a disk is a block-level backup. The software should take a snapshot of the data blocks at the disk level instead of at the individual file level. Divide the disk into many (millions or billions of) blocks, look for changes in each block, and back up only the changed blocks. This method does not care which files have changed. Every time a file changes, the data in the blocks where the file resides changes, and the backup software just backs up those changed blocks. This should be the best way for Nakivo to speed up the backup process. I normally put the page file, the printer spooler folder, and any file that I don't want to back up on another volume or disk in the Windows system, and then skip that disk or volume in the backup job to reduce the changes that need to be backed up. 1 point
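The block-level idea described in the post above can be sketched in a few lines: split the data into fixed-size blocks, hash each block, and compare against the hashes from the previous run. This is a minimal illustration of the concept, not how NAKIVO or any other product implements it; the block size and function names are assumptions, and production tools typically rely on a change-tracking driver or hypervisor feature rather than rehashing every block.

```python
import hashlib
from typing import List

BLOCK_SIZE = 4096  # assumption: illustrative block size in bytes


def block_hashes(data: bytes, block_size: int = BLOCK_SIZE) -> List[str]:
    """Hash every fixed-size block of the given data."""
    return [
        hashlib.sha256(data[offset:offset + block_size]).hexdigest()
        for offset in range(0, len(data), block_size)
    ]


def changed_blocks(previous: List[str], current: List[str]) -> List[int]:
    """Indices of blocks that are new or differ from the previous run."""
    return [
        index
        for index, digest in enumerate(current)
        if index >= len(previous) or previous[index] != digest
    ]


if __name__ == "__main__":
    old_disk = bytes(BLOCK_SIZE * 4)   # four zero-filled blocks
    new_disk = bytearray(old_disk)
    new_disk[5000] = 1                 # modify one byte inside block 1
    print(changed_blocks(block_hashes(old_disk), block_hashes(bytes(new_disk))))
```

Only the block containing the modified byte is reported, regardless of which file that byte belongs to.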

-

Hi, my Nakivo jobs are working correctly. But I just realized that when I create a new job or edit an existing job, I can no longer manually force a "Primary transporter" on source and target hosts (the transporters are grayed out), and only the "Automatic" option can be selected. However, I need this manual selection mode because, for some reason, in my setup I need to have a transporter running on the same machine as the ESX host for the backup to run without errors. Can you let me know if there is a way to re-enable manual selection of the transporter? Lei 1 point

-

I have done those steps; unfortunately, the specific transporter items are still greyed out. EDIT: I found out that the items are greyed out if the transporter has been configured with the "Enable Direct Connect for this transporter" option. Would you mind explaining the benefits of using this option with Automatic selection, versus unselecting this option and manually configuring the transporter at the backup job level? 1 point

-

Hi, this link doesn't work. Can you provide the correct link? I need this exact version for manual install: 1.0.9.0.75563. Latest version in synology package center is 10.8.0.73174. Thank you.1 point

-

Hi all,

We are thinking of replacing our current Microsoft DPM backup system with Nakivo, and I have many questions about the storage configuration and the technology Nakivo uses. The idea is to have a server with 40 TB of storage for disk backups; all the disks will be enterprise SSDs. To save space, we are interested in making synthetic full backups of our VMs. This is where my doubts begin, after our experience with Microsoft DPM:
- What file system does Nakivo use for synthetic full backups?
- Is ReFS required to perform synthetic full backups? (Does Nakivo use ReFS for block cloning?)
- Is there any guidance on implementing the file system underlying the Nakivo repository? I mean things like interleave size, block size, and file system.

Our idea is to set up the server with Windows using Storage Spaces so that we can expand the pool if needed. My experience indicates that without an efficient storage configuration, random write backup speed drops dramatically. The Competitor forum is full of posts with performance issues, and the cause is undoubtedly poorly configured storage. ReFS uses a large amount of memory when deleting large files, such as when purging old backups. I have seen it use up to 80 GB of RAM just to perform the purge, and this is due to storage performance. ReFS's uncontrolled metadata restructuring tasks and cache utilization also bring down performance.

Thank you very much for clarifying my initial doubts; I will surely have new questions after receiving your answer.

Regards, Jorge Martin

1 point

-

Thank you for your answer. I'll try with the instruction reported in Section 2. Elia1 point

-

When you deploy a new instance of Nakivo or create a new tenant in multi-tenant mode, the item creation order is inventory, node, then repository. That does not make sense if the only inventory I need to add is accessed through "Direct connect", because I need to add the nodes first. Please change the creation order during the first start to node, repository, then inventory, or allow us to skip the inventory. 1 point

-

As per another post, I am in the process of moving my Nakivo backups from older Data Domains to Synology NAS arrays. The process I plan to use is:
1. Install Nakivo Transporter on the Synology NAS.
2. Register Synology Transporter with Director.
3. Create a new "local folder" repo on the Synology transporter.
4. Configure a backup copy job to mirror the existing DD repo to the Synology repo.
5. Delete the backup copy job, as you can't edit the destination target of the backup job if the copy job exists, but tell it to "Keep existing backups".
6. Edit the VM backup job to point the VMs to their new location (this is a pain when you have a lot of VMs; I wish there was an easier way to do this or to have Nakivo automatically discover existing backups).
7. Run the VM backup job to start storing new backups on the Synology repo.

I ran a test and this worked fine, except all of the old recovery points that were copied by the backup copy job in step #4 had their retention set to "Keep Forever". I'm guessing this occurred when I deleted the copy job but told it to keep the backups. I'm assuming this is operating as intended? With 200 VMs and each having 9-11 recovery points, I can't manually reset the retention on 2,200 recovery points to fix this. I understand why it might have gotten changed when the backup copy job was deleted, but the Director wouldn't let me re-point the backup job until those copied backups were no longer referenced by another job object. I can use the "delete backups in bulk" to manually enact my original retention policy, but that will take 3 months to run the cycle. Any ideas?

1 point

-

Hello, in the meantime I opened a support case and they sent me a specific version, so my backups are working again. I just have a notification that my ESXi version is not supported, but I guess it will be supported in 10.10. Thanks, Julien 1 point

-

I am getting the below error message when i try to add some physical machines from the manager. Any ideas? An internal error has occurred. Reason: 1053 (SmbException).1 point

-

Hi, I'm using 10.8 on Linux to back up VMs from Hyper-V. I have several VMs with a lot of RAM, such as 32 or 48 GB. When I test my restores, I cannot use a host with less RAM than the original VM as the destination. For example, I want to restore a VM that has 48 GB of RAM to a host that has 32 GB. The process does not even start; the configuration procedure is blocked with a message saying the host needs more RAM. I find no way to resize the RAM or continue anyway. This is a horrible design decision for at least a couple of reasons:
- In my case I just restore the VMs and do NOT power them on; there's an option for that. Still, Nakivo says I cannot restore.
- In an emergency, this requires you to have a full set of working hosts with at least the same RAM as you had before, and this again is an awful decision that hinders the ability to use Nakivo in real-world recovery scenarios.

I am in charge of the restore, and if I decide to restore to a host with less RAM than the original one, it should be my problem. I would understand a warning from Nakivo, but I cannot understand why the restore is blocked. On Hyper-V you can create any number of VMs with any amount of RAM, and then decide which ones to start or resize. Reducing the restore options for no practical benefit and imposing artificial limits is just silly. Is there a way to force Nakivo to continue the restore? Thanks

1 point

-

I got an answer from support: the backup copy to tape feature is planned for version 10.11, which is expected to be released around November. Grega 1 point

-

I need to restore one file from a Linux server. I have a recovery server running Ubuntu 20.04, which I've always used for this kind of task, but I am no longer able to restore. The process starts, but the job stops afterwards: the disks are not mounted on the Linux server, so the files cannot be recovered. I collected a lot of entries from the all_logs_except_inventory.log file; the most relevant ones are below. The Nakivo version is 10.4.1 (build 59587 from 21 Oct 2021).

2023-08-14T18:50:35,449 [INFO ][Flr] [User-1/memphis] [505b0e4c-61bc-4c22-83fa-428af0f7b7e0] Accessing "LDAP - Produção" backup object to process "Recovery point Fri, 11 Aug 2023 at 21:00:05 (UTC -03:00)" recovery point. The backup object resides on "Onboard repository" repository. Repository UUID: "84ccb8c1-dd53-4a73-9cd8-e440d9508340". Transporter host: "10.5.1.126". Agent host: "192.168.0.150".
2023-08-14T18:52:15,696 [ERROR][Flr] Open Virtual Mount Session Dump
2023-08-14T18:52:19,237 [ERROR][Flr] com.company.product.api.plugin.misc.GenericException: Transporter internal error
2023-08-14T18:52:25,551 [ERROR][Events] [User-1/memphis] rts.disk.mount.recovery.complete: DiskMountCompletedEvent{title='activity.recovery.disk.mount.complete.title.fail', description='activity.recovery.disk.mount.complete.text.fail', transient='null'}

In the filesystem log I found this:

Aug 14 18:37:02 srvrestore multipathd[770]: sda: add missing path
Aug 14 18:37:02 srvrestore multipathd[770]: sda: failed to get udev uid: Invalid argument
Aug 14 18:37:02 srvrestore multipathd[770]: sda: failed to get sysfs uid: Invalid argument
Aug 14 18:37:02 srvrestore multipathd[770]: sda: failed to get sgio uid: No such file or directory
Aug 14 18:37:03 srvrestore multipathd[770]: sdb: add missing path
Aug 14 18:37:03 srvrestore multipathd[770]: sdb: failed to get udev uid: Invalid argument
Aug 14 18:37:03 srvrestore multipathd[770]: sdb: failed to get sysfs uid: Invalid argument
Aug 14 18:37:03 srvrestore multipathd[770]: sdb: failed to get sgio uid: No such file or directory

1 point
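When sifting through a large log like the one quoted above, it can help to pull out only the error entries for one component. The following is a small sketch that assumes each entry starts with a timestamp followed by `[LEVEL][Component]`, as in the lines pasted in the post; the regular expression and the helper names are illustrative and are not part of any NAKIVO tooling.

```python
import re
from typing import Iterable, Iterator, List, NamedTuple

# Assumed layout, based on the lines quoted in the post:
#   2023-08-14T18:52:15,696 [ERROR][Flr] message text
LINE_RE = re.compile(
    r"^(?P<timestamp>\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2},\d{3}) "
    r"\[(?P<level>[A-Z]+)\s*\]\[(?P<component>[^\]]+)\] (?P<message>.*)$"
)


class LogEntry(NamedTuple):
    timestamp: str
    level: str
    component: str
    message: str


def parse_entries(lines: Iterable[str]) -> Iterator[LogEntry]:
    """Yield an entry for every line that matches the assumed layout."""
    for line in lines:
        match = LINE_RE.match(line.strip())
        if match:
            yield LogEntry(**match.groupdict())


def errors_for(lines: Iterable[str], component: str) -> List[LogEntry]:
    """Return only ERROR entries logged by the given component."""
    return [
        entry for entry in parse_entries(lines)
        if entry.level == "ERROR" and entry.component == component
    ]


if __name__ == "__main__":
    sample = [
        "2023-08-14T18:50:35,449 [INFO ][Flr] [User-1/memphis] Accessing backup object",
        "2023-08-14T18:52:15,696 [ERROR][Flr] Open Virtual Mount Session Dump",
        "2023-08-14T18:52:19,237 [ERROR][Flr] com.company.product.api.plugin.misc.GenericException: Transporter internal error",
    ]
    for entry in errors_for(sample, "Flr"):
        print(entry.timestamp, entry.message)
```

To run it against a real file, pass the open file object in place of `sample`; lines that do not match the assumed layout, such as the multipathd entries, are simply skipped.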

-

I had the same problem: when mounting an NFS share from the Nakivo GUI, only the NakivoBackup directory was created, and then the GUI mount procedure failed with the same error. I solved it by removing the maproot/root_squash options on the NFS server. In my case it was FreeBSD 13 and /etc/exports.

The initial /etc/exports, which prevented Nakivo from setting permissions on files:
/vol1/nakivo1 -maproot=nakivouser -network=192.168.98.16/28

I changed it to the following, which works:
/vol1/nakivo1 -maproot=root -network=192.168.98.16/28

1 point

-

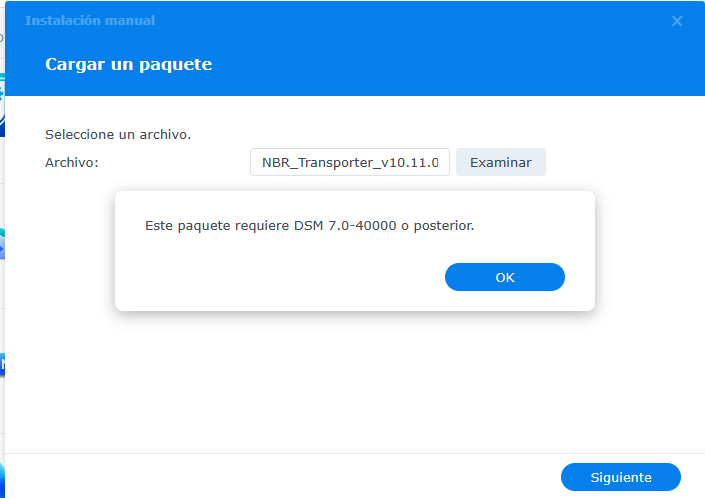

Hello again,

I doubled the heap size parameters twice. The first change did not fix the problem, but the second seems to have fixed it. I changed the manual IP back from .204 to .203 for testing and restarted the VM, and I can now access the web UI. The final heap size is shown in the screenshot above.

For anyone reading this in the future: to change the heap size, go to the VM console in ESXi.
1. Manage NAKIVO services -> Start/Stop Services -> stop both the Director and Transporter services for good measure.
2. Go back to the Main Menu -> Exit to system console -> log in with your user (nkvuser in my case).
3. Run sudo -i and enter the password again.
4. Install nano for text editing: sudo apt -y install nano
5. Type cd / (to move to the root folder), then cd etc/systemd/system, then sudo nano nkv-dirsrvc.service
6. Edit the file. Use the arrow keys to move down to the line beginning with "Environment="SVC_OPTIONS....." and move to the right until you see the heap size parameters shown above. Edit the parameters. When finished, press Ctrl+X and then Y. Press Enter to finish.

Reboot the VM, and you're done. I hope this helps someone in the future. Thanks for the help and have a great day.

1 point
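The manual edit described in the post above amounts to doubling the heap values on one line of the service file. As a rough sketch, the same change can be expressed as a string transformation. This assumes the heap size is set with the standard JVM flags `-Xms` and `-Xmx`; the post only shows the actual parameters in a screenshot, so the sample line and its values below are hypothetical, as is the function name.

```python
import re

# Standard JVM heap flags: -Xms (initial) and -Xmx (maximum), e.g. -Xmx1024m
HEAP_FLAG_RE = re.compile(r"(-Xm[sx])(\d+)([kKmMgG])")


def scale_heap_flags(options: str, factor: int = 2) -> str:
    """Multiply every -Xms/-Xmx value in the string by the given factor."""
    def _scale(match: "re.Match[str]") -> str:
        flag, amount, unit = match.groups()
        return f"{flag}{int(amount) * factor}{unit}"

    return HEAP_FLAG_RE.sub(_scale, options)


if __name__ == "__main__":
    # Hypothetical example line; the real contents of SVC_OPTIONS may differ.
    line = 'Environment="SVC_OPTIONS=-Xms512m -Xmx1024m"'
    print(scale_heap_flags(line))
```

Stopping the services before editing and rebooting afterwards, as the post describes, still apply; the sketch only covers the text change itself.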

-

Hello! My virtualized environment runs Hyper-V on Windows Server 2022. I have a problem with the cloud backup strategy for one VM, which has 2 TB of data. My cloud provider is Wasabi S3 Object. All the other VM backups, which are smaller, are working fine; my issue is with the 2 TB VM. The local job is configured to do a synthetic full every Friday. The job that saves the backups to the cloud is configured as a Backup Copy Job. But because the local job does the synthetic full, the copy to the cloud takes too long and impacts the other jobs. Any suggestions on backing up servers with large volumes of data to the cloud? What is the most efficient way to back up to the cloud? 1 point

-

Hey everyone! I've been using Microsoft 365 for my business, and I want to make sure I have proper backups in place to protect my data. I recently came across the NAKIVO blog, and I was wondering if anyone here could help me understand how to set up backups in Microsoft 365. Any guidance would be appreciated!1 point

-

Another approach to consider is the native backup features in Microsoft 365. My recommendation is to watch this useful webinar. The NAKIVO blog might have more information about it. 1 point

-

Hi, We have to periodically backup a few TBs of files (20) from a NAS to tape and the amount is increasing considerably over time. What will be the proper licensing to get so the pricing will not change based on the amount of data being backed up ? Thanks1 point

-

I completely agree with TonioRoffo and Jim. Deduplication efficiency and forever incremental backups have been, from the beginning, among the main reasons I chose Nakivo as the number one backup solution for my customers. The obligation to perform full backups periodically was extremely tedious for some of my customers, for obvious reasons of time and space consumption. Now I find myself having to destroy the repositories with all my backups and recreate them with the correct settings in order to use them in "incremental forever" mode, as I was used to in previous versions of Nakivo. I think the change was indeed a bad idea. 1 point
