Manuel Rivera

Group: Members | Posts: 15 | Days Won: 2

Everything posted by Manuel Rivera

Limited speed for task / transporter?

Manuel Rivera replied to Manuel Rivera's topic in VMware backup

Solved! I've found the problem. I think there is a configuration issue in the factory-default TCP settings of the Intel QNAP:

Default receive TCP window on the Intel QNAP: `net.ipv4.tcp_rmem = "4096 1638400 1638400"`
Default receive TCP window on the ARM QNAP: `net.ipv4.tcp_rmem = "32768 87380 16777216"`

I think the Intel's config had too low a minimum window. As soon as I changed the values on the Intel to the ones on the ARM, SSH transfer speed more than doubled. So I tweaked the TCP windows (send and receive) and got my beloved 1 Gbps per job. Commands (run as root over SSH on the QNAP):

`sysctl -w net.ipv4.tcp_rmem="131072 524288 16777216"`
`sysctl -w net.ipv4.tcp_wmem="131072 524288 16777216"`

Also, I think it's active by default, but it's not a bad idea to force TCP window scaling:

`sysctl -w net.ipv4.tcp_window_scaling=1`

I hope it's useful if anyone has the same issue. Just be sure to add the tweaks to autorun.sh so they survive reboots. Thanks
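A minimal sketch of the "add the tweaks to autorun.sh" step, written for a POSIX shell such as the one on the NAS. The function name `persist_tcp_tweaks` is hypothetical, and the autorun.sh path is passed in as an argument because where that file lives (and how it gets enabled) depends on the QNAP model and firmware. It appends the three sysctl lines from the post only if they are not already present, so running it twice does not duplicate them.

```shell
# Hypothetical helper: append the sysctl tweaks from the post to an
# autorun script, skipping any line that is already there (idempotent).
# $1 = path to the autorun script (location is model/firmware dependent).
persist_tcp_tweaks() {
  local script line
  script="$1"
  touch "$script" || return 1
  # Same three commands as in the post; single quotes keep the inner
  # double quotes intact.
  for line in \
    'sysctl -w net.ipv4.tcp_rmem="131072 524288 16777216"' \
    'sysctl -w net.ipv4.tcp_wmem="131072 524288 16777216"' \
    'sysctl -w net.ipv4.tcp_window_scaling=1'
  do
    # -q: quiet, -x: whole-line match, -F: fixed string (no regex)
    grep -qxF -- "$line" "$script" || printf '%s\n' "$line" >> "$script"
  done
}
```

For example, `persist_tcp_tweaks /path/to/autorun.sh`, where the path is a placeholder for wherever autorun.sh lives on your unit.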

Limited speed for task / transporter?

Manuel Rivera replied to Manuel Rivera's topic in VMware backup

OK, my apologies... It seems to be a QNAP issue, or a network issue. SSH (SCP or rsync) transfers from any remote server to the Intel QNAP top out at 200 Mbps. The weird thing is that the same SCP from the internal network has no cap. My bet was wrong: the NAKIVO Transporter probably uses some kind of SSH-style transfer method, so it hits the same cap, but it's not its fault. Maybe an internal firewall is throttling connections from outside the network? (I have all "visible" firewalls turned off.) I completely reset the QNAP, haven't installed NAKIVO yet, and I still have the cap. The other QNAP right beside this one (the ARM one) is performing fine. Any clue? I will open a support ticket with the QNAP guys. Sorry again for all the misunderstanding.
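One plausible way to read the "remote transfers capped, LAN transfers not" symptom, offered as a sketch rather than a diagnosis: a single TCP connection cannot move much more than one window per round trip, so its throughput is bounded by roughly window / RTT. The 65 ms RTT below is an assumption picked for illustration (the thread never states the remote path's latency); 1638400 and 16777216 bytes are the maximum `tcp_rmem` values quoted in the "Solved!" post for the Intel and ARM units. The kernel does not advertise the entire receive buffer as window, so real ceilings sit somewhat below these numbers. `window_cap_mbps` is a hypothetical helper.

```shell
# Rough upper bound for ONE TCP connection: throughput <= window / RTT.
# $1 = window in bytes, $2 = round-trip time in milliseconds.
# Prints Mbps (integer, rounded down).
window_cap_mbps() {
  # bits = bytes * 8; seconds = ms / 1000; Mbps = bits / seconds / 10^6
  echo $(( $1 * 8 / ($2 * 1000) ))
}

window_cap_mbps 1638400 65     # Intel default max, assumed 65 ms path -> 201
window_cap_mbps 1638400 1      # same window on a ~1 ms LAN -> 13107
window_cap_mbps 16777216 65    # ARM default / tuned max, 65 ms -> 2064
```

With a LAN round trip of about 1 ms, the same window allows far more than 1 Gbps, which would be consistent with local SCP showing no cap while remote transfers did.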

Limited speed for task / transporter?

Manuel Rivera replied to Manuel Rivera's topic in VMware backup

Just to give the developers an extra clue, given the separate configs on the Transporters: the source VMs are on ESXi 7. I can't test any other version.

Limited speed for task / transporter?

Manuel Rivera replied to Manuel Rivera's topic in VMware backup

Another revealing test:

- Fully removed NAKIVO Backup from the Intel QNAP.
- Installed only the NAKIVO Transporter on the Intel QNAP (and updated it to 10.4).
- Configured the ARM QNAP job to use the same source transporter as always, but changed the CIFS repo to use the Transporter installed above. Remember, the repo is the one on the Intel, accessed via CIFS.

Well... 200 Mbps top again, all the time. This is not using native NAKIVO on the Intel QNAP, just the Transporter alone. If I change only the destination transporter to the ARM QNAP, I get full speed again. So my bet is that there is an issue with the NAKIVO Transporter for Intel QNAP. Let's wait for the test on another, independent Intel QNAP.

Limited speed for task / transporter?

Manuel Rivera replied to Manuel Rivera's topic in VMware backup

I did the test. I virtualized Windows 10 on the QNAP's Virtualization Station, and guess what: a virtualized Windows 10 inside the QNAP, with only 2 CPUs and 4 GB of RAM, runs NAKIVO and delivers more than 3x the speed. There is lots of latency and I/O wait due to Windows 10 swapping, but the same job (50 GB, full backup) still finishes much faster in total time, at >300 Mbps. Also note that native NAKIVO on the same NAS NEVER gets more than 25 MB/s (~200 Mbps) running a single job. More interesting is the fact that it runs at that speed ALL THE TIME. Also, running 2 simultaneous jobs delivers 400 Mbps, 3 deliver 600 Mbps, etc. Maybe I'm way off, but I bet something is throttling the onboard repository on native NAKIVO v10.4 for Intel QNAP. Next week we will test on another borrowed Intel QNAP with the same NAKIVO version to see if we can replicate the issue. Thanks a lot
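The figures in this post fit a simple model in which each job has its own ceiling and jobs only add up until the shared 1 Gbps link is full. A sketch of that arithmetic, using the 25 MB/s (200 Mbps) per-job figure and a 1000 Mbps link from the thread; the helper names are hypothetical and the model ignores protocol overhead, so real totals land a little under the link rate.

```shell
# MB/s (megabytes, as shown by the NAS) -> Mbps: multiply by 8.
mbytes_to_mbits() {
  echo $(( $1 * 8 ))
}

# Simple model: every job is capped individually, all jobs share one link.
# $1 = number of jobs, $2 = per-job cap (Mbps), $3 = link capacity (Mbps).
aggregate_mbps() {
  local total
  total=$(( $1 * $2 ))
  if [ "$total" -gt "$3" ]; then
    total=$3
  fi
  echo "$total"
}

mbytes_to_mbits 25    # -> 200
for n in 1 2 3 5 6; do
  printf '%s jobs: %s Mbps\n' "$n" "$(aggregate_mbps "$n" 200 1000)"
done
```

Under this model a single job never benefits from the free link capacity, which matches the pattern reported throughout the thread.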

Limited speed for task / transporter?

Manuel Rivera replied to Manuel Rivera's topic in VMware backup

It's the same config, not the same repo. As both are QNAP NAS units, each has its own internal repo. Compression and deduplication are active on both. I also tried using the Intel's repo from the ARM via CIFS, and it runs fine at high speeds (a little slower than with its own repo because of the traffic between the two NAS units, but much faster than the 200 Mbps the Intel gets by itself). If you mean CPU usage, it's below 20% even with 3 backup jobs at a time. These NAS units do nothing but NAKIVO backups. And once again, remember that the limit is per job: if I run 5 simultaneous jobs on the Intel, the network is saturated at almost 1 Gbps with less than 30% CPU load. I will do a little test today. As the Intel QNAP supports virtualization, I will try to set up a minimal virtualized Windows 10 with just the Windows version of NAKIVO inside the Intel NAS. I know there will be much more CPU load, but I want to check whether the issue comes from the NAKIVO Intel QNAP package by seeing if I get speeds over 200 Mbps on a single job.

Limited speed for task / transporter?

Manuel Rivera replied to Manuel Rivera's topic in VMware backup

Hi Moderator,

"the NAKIVO Job speed depends on the speed of the source data read action, the speed of the data transfer, and the speed of the data write action. All those stages are affected by the NAKIVO Job workflow."

Yes, I know, but this same job runs on an ARM QNAP on the same network and delivers >700 Mbps on a single job. Same source VMs, same network, same transporters (except the onboard one), and the Intel QNAP has higher CPU and storage specs. Also note that running 4 jobs at a time delivers 800 Mbps on both QNAPs without high load. The issue looks like some kind of per-job throttle in the Intel QNAP version of NAKIVO. We will test further with Qloudea, backing up VMware to another Intel QNAP, to see if the fault is reproducible.
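The moderator's description can be read as a three-stage pipeline. A minimal sketch, assuming the stages overlap so that a job runs at the pace of its slowest stage; the stage speeds below are made up for illustration and `slowest_stage` is a hypothetical helper. The ARM comparison matters here because the source side and the network were the same as for a job that reached >700 Mbps, which narrows down where a 200 Mbps bottleneck could sit.

```shell
# Pipeline model: read -> transfer -> write run concurrently, so the job's
# steady-state speed is bounded by the slowest stage.
# Args: stage=value pairs (same unit for all, e.g. Mbps). Prints the slowest.
slowest_stage() {
  local best_name='' best_val='' pair name val
  for pair in "$@"; do
    name=${pair%%=*}   # text before the first '='
    val=${pair#*=}     # text after the first '='
    if [ -z "$best_val" ] || [ "$val" -lt "$best_val" ]; then
      best_name=$name
      best_val=$val
    fi
  done
  echo "$best_name=$best_val"
}

# Hypothetical figures, for illustration only:
slowest_stage read=900 transfer=200 write=800   # -> transfer=200
```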

Limited speed for task / transporter?

Manuel Rivera replied to Manuel Rivera's topic in VMware backup

I can see the upload speed on the Windows 10 Transporter or on the CentOS 7 one... QNAP also reports about 25 MB/s (~200 Mbps), the same info.

This QNAP is a 453D (Intel Celeron quad-core), upgraded to 8 GB of RAM, with 4 x Seagate EXOS 6 TB in RAID-5.

As I told NAKIVO support, I have tested on another QNAP model I have here, a bit lower on specs: a TS-431P3 (ARM quad-core), also with 8 GB, but with 4 x 3 TB WD Red in RAID-5. The other NAS (431P3), running the same version of NAKIVO but the ARM build, delivers more than 700 Mbps on a single job. I also tested on a Windows 10 install, which also delivers 700+ Mbps on a single backup.

I removed NAKIVO from the 453D, reinstalled and reconfigured it, but still get 200 Mbps per job. I also don't know whether any firewall or setting on the NAS could trigger this kind of behaviour, as every other app on the NAS reaches the maximum network speed. I even used Browser Station to run a speed test in Chrome running on the NAS, and it shows 900+ Mbps upload and download.

That's the weird thing... Both NAS units use the same version of NAKIVO, the same source and the same transporters. I also tried backing up to an external repo from the 453D, with the same result. I'm almost sure there is some throttling enabled in a hidden corner of the config database; it's the only difference between the two NAS units. On the 453 I switched throttling on for a few hours just to test; on the 431P I didn't. I'm wondering whether any config saved in the onboard backup repository could trigger the throttle.

UPDATE: I did a full reinstall, deleting the repository (OK, not deleting, renaming xD) and starting with a completely empty one, and the issue persists. Right now I'm doing backups using the 431P as director and the 453's repository via CIFS (full speed). If I do the opposite, the 453 still throttles at 200 Mbps, using the 431's repo via CIFS. I also discussed it with some support colleagues (not from the NAKIVO team), and we all suspect a possible bug in the Intel QNAP version of NAKIVO 10.4...
But it's hard to be sure; we don't have another Intel QNAP to test.
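In hindsight, the "Solved!" post at the top of this feed traced the difference between the two boxes to kernel TCP buffer defaults rather than to anything in NAKIVO's configuration. A small sketch for diffing those values between two NAS units, assuming you have read them on each box with `sysctl -n net.ipv4.tcp_rmem` (or `tcp_wmem`); `compare_tcp_mem` is a hypothetical helper, and the sample values are the Intel and ARM defaults quoted in that post.

```shell
# Compare two "min default max" triples (e.g. tcp_rmem from two hosts)
# and print only the fields that differ.
# $1 = value from host A, $2 = value from host B.
compare_tcp_mem() {
  # Unquoted on purpose: split both triples on spaces/tabs into $1..$6.
  set -- $1 $2
  [ "$#" -eq 6 ] || { echo "expected two 3-field values" >&2; return 1; }
  [ "$1" = "$4" ] || echo "min: $1 vs $4"
  [ "$2" = "$5" ] || echo "default: $2 vs $5"
  [ "$3" = "$6" ] || echo "max: $3 vs $6"
}

compare_tcp_mem "4096 1638400 1638400" "32768 87380 16777216"
# min: 4096 vs 32768
# default: 1638400 vs 87380
# max: 1638400 vs 16777216
```

An empty result means the two hosts use identical values for that setting.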

Limited speed for task / transporter?

Manuel Rivera replied to Manuel Rivera's topic in VMware backup

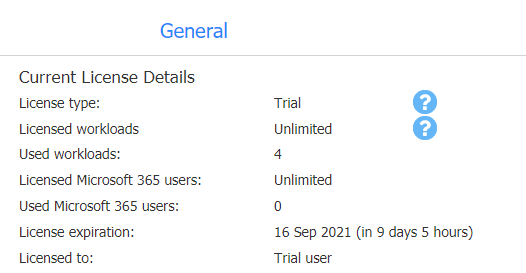

Just checking: I'm running in trial mode right now (I plan to buy a PRO license when the trial ends). I'm also a Bronze partner. Does trial mode have any limitations? Could this be the cause of the throttling? Thanks a lot

Limited speed for task / transporter?

Manuel Rivera replied to Manuel Rivera's topic in VMware backup

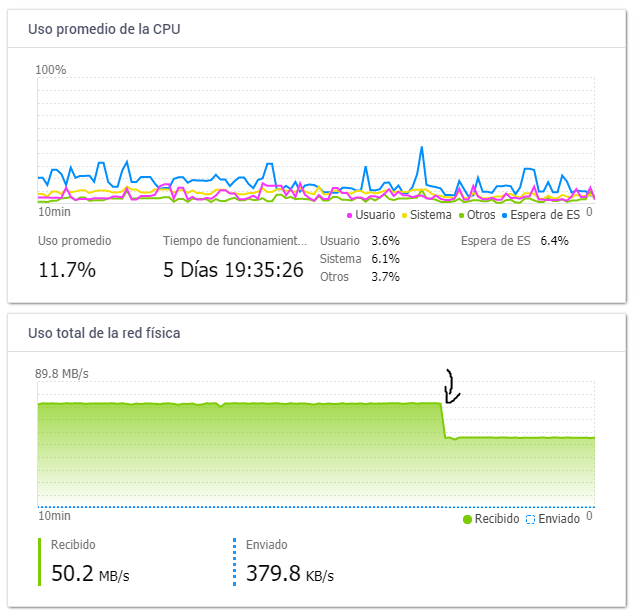

Hi Mario, thanks a lot. The NAS is a 4-core Intel with 8 GB. I checked the CPU load: not even 25% with 3 concurrent backup tasks. As you can see in Task Manager on the transporter, if I run 3 simultaneous tasks, I get 3 x 200 Mbps. But I can never get more than 200 Mbps on a single backup task...

Hi everyone, I have the following setup:

- One ESXi server with 10 Gbps connectivity
- 5 VMs to back up
- 1 QNAP NAS connected to a 1 Gbps network (in the office), with the local transporter running
- 3 different remote transporter agents set up (just for testing, all VMs): 1 Windows 10 VM, 1 CentOS 7 VM and one NAKIVO Virtual Appliance, all up and running

The issue I have found is that backups always top out at 200 Mbps per task. I've tried forcing each of the 3 different transporters, forcing Hot-add/Network transfer, and backing up VMs simultaneously (if I run 5 tasks at a time, I get 1 Gbps in total). But EACH VM backup always tops out at 200 Mbps. I can back up 3 VMs at a time and get 600 Mbps in total, but I cannot back up a single VM at more than 200 Mbps. So I fear that if I have to restore a single VM, I will hit that 200 Mbps limit and have to wait 5x the required time.

Setting max concurrent tasks on the transporters doesn't help either. I tried 1, 2, 5 and even 10, and still get 200 Mbps per task. In the Win10 Task Manager I can see each NAKIVO agent delivering 190~200 Mbps all the time. I can also see in the QNAP task manager that each backup is using 25 MB/s (= 200 Mbps).

I have individually tested VM-to-NAS speed (1 Gbps), transporter-to-NAS (also 1 Gbps), ESXi-to-transporter (10 Gbps), etc. Even the office's ISP connection reaches 1 Gbps. I always get a nice full-speed connection, no matter which component I test, but when backing up, I get this issue. Bandwidth throttling is disabled in all configs, of course. I also tested enabling it and raising it to 1 Gbps, but, as expected, it made no difference.

Any clue? I wonder whether the onboard transporter on the QNAP has some per-task bandwidth limit, as it is the only one I cannot replace. I don't really believe it, though, because the onboard transporter can handle the 5 simultaneous backups and write data at 1 Gbps.

The NAS is new, it has no firewall/bandwidth limits, and I have another QNAP NAS model with the same issue on the same network (not performing backups right now)... Thanks a lot! Any ideas?
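The "5x longer restore" worry is plain rate arithmetic. A sketch, assuming a 50 GB VM (the job size mentioned elsewhere in the thread), decimal gigabytes, and no compression, deduplication or protocol overhead; `transfer_seconds` is a hypothetical helper.

```shell
# Time to move a given amount of data at a given rate.
# $1 = size in GB (decimal, 10^9 bytes), $2 = rate in Mbps.
# Prints whole seconds (rounded down).
transfer_seconds() {
  # GB * 8000 = megabits; megabits / Mbps = seconds
  echo $(( $1 * 8000 / $2 ))
}

transfer_seconds 50 200    # -> 2000 (about 33 minutes)
transfer_seconds 50 1000   # -> 400 (under 7 minutes)
```

At 200 Mbps the same transfer takes five times as long as at 1000 Mbps, which is exactly the ratio the post is concerned about.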