Posts posted by The Official Moderator

-

-

7 hours ago, eljub said:

Hello,

In the meantime, I opened a support case, and they sent me a specific version, so my backups are working again.

I just get a notification that my ESXi version is not supported, but I guess it will be supported in 10.10.

Thanks

Julien

Thank you for choosing NAKIVO, and we look forward to your reply and assisting you further.

-

On 10/6/2023 at 9:15 PM, eljub said:

Hello,

I just updated to VMware 8.0 U2.

Will this be integrated in Nakivo 10.10?

I no longer have a connection to my VMware server from Nakivo.

Thanks

Julien

@eljub, your information has been received and forwarded to our 2nd Level Support Team. We will follow up with you shortly. Thank you for using NAKIVO Backup & Replication as your backup solution.

-

With uptime and data availability requirements on the rise, real-time replication emerges as an invaluable disaster recovery tool. However, misconceptions and overlapping terminology often make it easy to confuse real-time replication with other data protection techniques.

Are you confident in your knowledge of real-time replication? Take our quiz to test your expertise and see if you can get a perfect score! https://www.proprofs.com/quiz-school/ugc/story.php?title=realtime-replication-how-to-build-a-more-resilient-it-infrastructureio


-

Special Offer for Attendees*

Attend the webinar and get a chance to win:

- A $100 Amazon eGift Card in a random drawing for scheduling a demo during the webinar

- A $25 Amazon eGift Card for completing a quick post-webinar survey

In times of disruption, swift recovery is key to minimizing downtime and data loss. It’s not just about backing up your data; it’s about ensuring you can quickly and seamlessly resume operations with minimal disruption when the unexpected happens.

Register for our upcoming webinar to explore how real-time replication can deliver recovery objectives as low as one second, effectively minimizing data loss and downtime during disruptions or system failures.

What it covers

- Overview: Understanding Real-time Replication

- Concepts: Types of replication, differences and key metrics

- Tools: NAKIVO’s Real-Time Replication for VMware Beta

- Scenarios: Data availability, operational resilience and system migration

- Live simulation: Using real-time replication to recover from a disaster scenario

- Q&A session: Insights from experts in real-time replication

When to attend

- Americas: Thursday, October 12, 11 AM - 12 PM EDT

- EMEA: Thursday, October 12, 5–6 PM CEST

BONUS: Attend and get free access to NAKIVO’s SMB Disaster Preparedness Guide.

*Terms and conditions apply

-

On 9/22/2023 at 9:49 PM, cmangiarelli said:

As per another post, I am in the process of moving my Nakivo backups from older Data Domains to Synology NAS arrays. The process I plan to use is:

- Install Nakivo Transporter on the Synology NAS.

- Register Synology Transporter with Director.

- Create a new "local folder" repo on the Synology transporter.

- Configure a backup copy job to mirror the existing DD repo to the Synology Repo.

- Delete the backup copy job, as you can't edit the destination target of the backup job if the copy job exists, but tell it to "Keep existing backups".

- Edit the VM backup job to point the VMs to their new location (this is a pain when you have a lot of VMs, wish there was an easier way to do this or have Nakivo automatically discover existing backups).

- Run the VM backup job to start storing new backups on the Synology repo.

I ran a test and this worked fine, except all of the old recovery points that were copied by the backup copy job in step #4 had their retention set to "Keep Forever". I'm guessing this occurred when I deleted the copy job but told it to keep the backups. I'm assuming this is operating as intended? With 200 VMs and each having 9-11 recovery points, I can't manually reset the retention on 2,200 recovery points to fix this. I understand why it might have gotten changed when the backup copy job was deleted, but the Director wouldn't let me re-point the backup job until those copied backups were no longer referenced by another job object. I can use the "delete backups in bulk" to manually enact my original retention policy, but that will take 3 months to run the cycle. Any ideas?

@cmangiarelli, we recommend using the standard way to move backup data to another repository: a backup copy job configured with the retention that works for you (https://helpcenter.nakivo.com/User-Guide/Content/Backup/Creating-Backup-Copy-Jobs/Creating-Backup-Copy-Jobs.htm). Have you had a chance to try this?

I am looking forward to hearing from you.
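The quoted post puts the backlog at roughly 200 VMs × 9-11 recovery points, or up to about 2,200 points now marked "Keep Forever". Assuming those points could be exported as (VM, creation time) pairs and that the original policy was a simple "keep the last N per VM" rule (both are assumptions; NAKIVO job retention can be more elaborate), a minimal Python sketch of working out which points a bulk delete would need to target might look like this. `RecoveryPoint` and `points_to_delete` are hypothetical names, not part of any NAKIVO API.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class RecoveryPoint:
    """One recovery point as it might appear in an exported list (hypothetical)."""
    vm: str
    created: datetime


def points_to_delete(points: list[RecoveryPoint], keep_last: int) -> list[RecoveryPoint]:
    """Return every recovery point older than the newest `keep_last` for its VM."""
    by_vm: dict[str, list[RecoveryPoint]] = defaultdict(list)
    for point in points:
        by_vm[point.vm].append(point)

    to_delete: list[RecoveryPoint] = []
    for vm_points in by_vm.values():
        # Sort newest first, so everything from index keep_last onward is surplus.
        vm_points.sort(key=lambda p: p.created, reverse=True)
        to_delete.extend(vm_points[keep_last:])
    return to_delete


if __name__ == "__main__":
    # 200 VMs x 11 daily recovery points = 2,200 points, the upper figure in the post.
    start = datetime(2023, 9, 1)
    points = [
        RecoveryPoint(f"vm{i:03d}", start + timedelta(days=d))
        for i in range(200)
        for d in range(11)
    ]
    doomed = points_to_delete(points, keep_last=7)
    print(f"{len(points)} points, {len(doomed)} to delete")
```

With 11 points per VM and `keep_last=7`, the sketch reports 2,200 points and 800 to delete (the 4 oldest per VM).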

-

30 minutes ago, cmangiarelli said:

As per another post, I am in the process of moving my Nakivo backups from older Data Domains to Synology NAS arrays. The process I plan to use is:

- Install Nakivo Transporter on the Synology NAS.

- Register Synology Transporter with Director.

- Create a new "local folder" repo on the Synology transporter.

- Configure a backup copy job to mirror the existing DD repo to the Synology Repo.

- Delete the backup copy job, as you can't edit the destination target of the backup job if the copy job exists, but tell it to "Keep existing backups".

- Edit the VM backup job to point the VMs to their new location (this is a pain when you have a lot of VMs, wish there was an easier way to do this or have Nakivo automatically discover existing backups).

- Run the VM backup job to start storing new backups on the Synology repo.

I ran a test and this worked fine, except all of the old recovery points that were copied by the backup copy job in step #4 had their retention set to "Keep Forever". I'm guessing this occurred when I deleted the copy job but told it to keep the backups. I'm assuming this is operating as intended? With 200 VMs and each having 9-11 recovery points, I can't manually reset the retention on 2,200 recovery points to fix this. I understand why it might have gotten changed when the backup copy job was deleted, but the Director wouldn't let me re-point the backup job until those copied backups were no longer referenced by another job object. I can use the "delete backups in bulk" to manually enact my original retention policy, but that will take 3 months to run the cycle. Any ideas?

Hello @cmangiarelli. To address your inquiry effectively, we recommend generating and submitting a support bundle to support@nakivo.com by following the instructions outlined here: https://helpcenter.nakivo.com/display/NH/Support+Bundles

By doing so, you will facilitate a thorough examination of your issue by our esteemed Technical Support team.

Your cooperation in this matter is greatly appreciated.

-

10 hours ago, tkriener said:

Hello,

thanks for your explanation.

I'm not able to provide any support bundle, because we are not yet using the M365 backup from Nakivo.

I understand from the following statement in the "Microsoft 365" section of the 10.9 Release Notes that backup of "online archive mailboxes" is not supported in 10.9:

-

Backup and recovery of guest mailboxes, public folder mailboxes, online archive mailboxes, Group calendars, and Group planners, distribution groups, mail-enabled groups, security groups, resource mailboxes, read-only calendars, calendars added from Directory, event messages (that is, "#microsoft.graph.eventMessageRequest", "#microsoft.graph.eventMessage"), and outbox folders are not supported.

This is where I would like to know whether this is on the roadmap or maybe already in version 10.10.

Thanks,

Thomas Kriener

@tkriener Dear Mr. Kriener, thank you for your post and your inquiry.

It has come to my attention that you are seeking clarification regarding the inclusion of support for backing up online archive mailboxes in the forthcoming release of NAKIVO Backup & Replication, specifically in version 10.10.

Although we prefer to describe unreleased features as "planned," since circumstances can change, I can confirm that this feature is on our product roadmap.

Best regards.

-

-

13 hours ago, cmangiarelli said:

I currently have a Nakivo installation backing up to Dell Data Domains but transitioning over to Synology FS6400s. The transporters that control my backup repositories have the 'ddboostfs' plugin installed and mount the appropriate storage locations on the local Data Domain.

I recently got my new Synology FS6400s online in my lab. After some web searching, I was able to find out how to manually update the Nakivo Transporter service to 10.9; only 10.8 is available in the public channel, and it's incompatible with a 10.9 Director. The fact that I had to do this already makes me wary of running the Transporter service on the array itself, even though we specifically purchased the arrays with 128GB RAM to ensure adequate resources. Anyway, I created a forever incremental repo on each array and testing was successful, though my Data Domains use incremental with full backup and rely on DDOS for dedupe. However, I am starting to question how I was going to use my Synology arrays to replace my Data Domains. I wasn't aware that the Synology arrays support data deduplication themselves, which is why I loaded the Nakivo Transporter service in order to reduce my storage footprint. Using Nakivo's deduplication requires a period of space reclamation that has historically been dangerous if the process is interrupted. Given what I now know, I'm wondering how others have used Synology arrays to store their backup data. I myself question the following:

1. Should I still use the Nakivo Transporter service on the array, configure my repo(s) for incremental with full backup, and turn on Synology's data deduplication process for the volume hosting my backups?

2. Should I consider forgoing the Transporter service on the array, configure NFS mount points to my virtual transporter appliances, and turn on Synology's data deduplication process for the volume hosting my backups?

3. I created a folder (/volume1/nakivo) without using the Shared folder wizard where my repo stores its data. I've seen prompts indicating that information stored outside of "Shared Folders" can be deleted by DSM updates. Will future patches potentially cause my nakivo data to be removed since I created the folder myself? I have no reason to share out the base /volume1/nakivo folder if the Transporter service is local to my array.

Other advice on using Synology and Nakivo together is much appreciated.

P.S. After VM backups are taken, we perform backup copies across data center boundaries to ensure our backup data is available at an off-site location for DR/BCM purposes. By placing the transporter service on the array itself, we figure this replication process will be mainly resource driven at the array level (with network involvement to move the data) which will lighten our virtual transporter appliance footprint. Currently our replication process must send data from the local DD to a local transporter VM that then sends the data across an inter-dc network link to a remote transporter VM that finally stores the data on the remote DD. The replication process uses a good chunk of virtual cluster resources that could be better directed elsewhere.

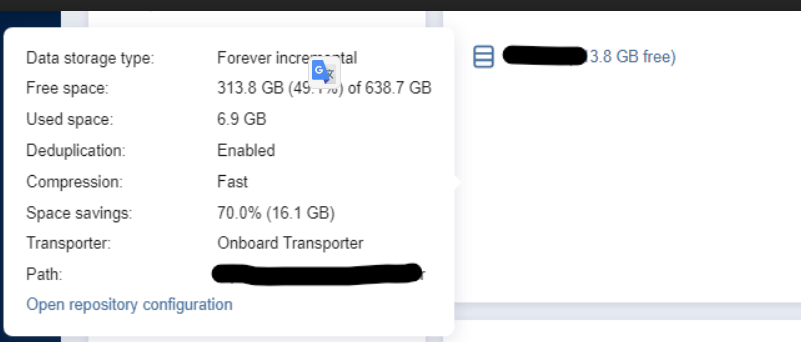

@cmangiarelli We appreciate your patience during our investigation.

>>> forever incremental repo on each array and testing was successful;

Please add a screenshot with detailed information about the newly created repository.

>>> Given what I now know, I'm wondering how others have used Synology arrays to store their backup data? I myself question the following:
>>> 1. Should I still use the Nakivo Transporter service on the array, configure my repo(s) for incremental with full backup, and turn on Synology's data deduplication process for the volume hosting my backups?
>>> 2. Should I consider forgoing the Transporter service on the array, configure NFS mount points to my virtual transporter appliances, and turn on Synology's data deduplication process for the volume hosting my backups?

NAKIVO has never tested Synology's on-array data deduplication, so we cannot predict the level of deduplication for this implementation. Based on our experience, deduplication at the hardware level is more effective and saves more space, and we recommend using it.

The correct configuration for this environment is: NAKIVO Transporter installed on the Synology host, and an Incremental with full repository configured as a local folder on the array volume where Synology's data deduplication process is turned on. The backup jobs must be configured to create an active full backup once every 15-20 runs.

>>> 3. I created a folder (/volume1/nakivo) without using the Shared folder wizard where my repo stores its data. I've seen prompts indicating that information stored outside of "Shared Folders" can be deleted by DSM updates. Will future patches potentially cause my nakivo data to be removed since I created the folder myself? I have no reason to share out the base /volume1/nakivo folder if the Transporter service is local to my array.

For security reasons, we highly recommend creating the repository folder manually, without using the Shared folder wizard. Please do not create this folder in the NAKIVO application home directory (it can be destroyed during the update process). We have no information about data loss during DSM updates; it is better to contact Synology support with this question.

We are looking forward to your feedback. Please do not hesitate to contact us if you need any further information.

-

12 minutes ago, cmangiarelli said:

I currently have a Nakivo installation backing up to Dell Data Domains but transitioning over to Synology FS6400s. The transporters that control my backup repositories have the 'ddboostfs' plugin installed and mount the appropriate storage locations on the local Data Domain.

I recently got my new Synology FS6400s online in my lab. After some web searching, I was able to find out how to manually update the Nakivo Transporter service to 10.9; only 10.8 is available in the public channel, and it's incompatible with a 10.9 Director. The fact that I had to do this already makes me wary of running the Transporter service on the array itself, even though we specifically purchased the arrays with 128GB RAM to ensure adequate resources. Anyway, I created a forever incremental repo on each array and testing was successful, though my Data Domains use incremental with full backup and rely on DDOS for dedupe. However, I am starting to question how I was going to use my Synology arrays to replace my Data Domains. I wasn't aware that the Synology arrays support data deduplication themselves, which is why I loaded the Nakivo Transporter service in order to reduce my storage footprint. Using Nakivo's deduplication requires a period of space reclamation that has historically been dangerous if the process is interrupted. Given what I now know, I'm wondering how others have used Synology arrays to store their backup data. I myself question the following:

1. Should I still use the Nakivo Transporter service on the array, configure my repo(s) for incremental with full backup, and turn on Synology's data deduplication process for the volume hosting my backups?

2. Should I consider forgoing the Transporter service on the array, configure NFS mount points to my virtual transporter appliances, and turn on Synology's data deduplication process for the volume hosting my backups?

3. I created a folder (/volume1/nakivo) without using the Shared folder wizard where my repo stores its data. I've seen prompts indicating that information stored outside of "Shared Folders" can be deleted by DSM updates. Will future patches potentially cause my nakivo data to be removed since I created the folder myself? I have no reason to share out the base /volume1/nakivo folder if the Transporter service is local to my array.

Other advice on using Synology and Nakivo together is much appreciated.

P.S. After VM backups are taken, we perform backup copies across data center boundaries to ensure our backup data is available at an off-site location for DR/BCM purposes. By placing the transporter service on the array itself, we figure this replication process will be mainly resource driven at the array level (with network involvement to move the data) which will lighten our virtual transporter appliance footprint. Currently our replication process must send data from the local DD to a local transporter VM that then sends the data across an inter-dc network link to a remote transporter VM that finally stores the data on the remote DD. The replication process uses a good chunk of virtual cluster resources that could be better directed elsewhere.

@cmangiarelli Hello. To address your inquiry effectively, we recommend generating and submitting a support bundle to support@nakivo.com by following the instructions outlined here: https://helpcenter.nakivo.com/display/NH/Support+Bundles

By doing so, you will facilitate a thorough examination of your issue by our esteemed Technical Support team. Simultaneously, this will enable us to escalate your feature request to our Product Development team for potential inclusion in our future development roadmap. Your cooperation in this matter is greatly appreciated, and we thank you for your understanding. Meanwhile, I requested the information to inform the community about the situation.

Best regards

-

16 hours ago, tkriener said:

Hello,

we are evaluating a switch from our existing M365-Backup solution to Nakivo.

Are there any plans to support

- the backup of Exchange Online Mailboxes

- Modern Authentication

From the Release Notes of 10.9 I saw that the backup of Exchange Online Mailboxes is not supported yet.

In the user guide I found that the backup user needs to be excluded from conditional access. I know that in the past there were several limitations by MS, with APIs not supporting modern authentication, but as far as I know, those have been fixed. Is this perhaps already implemented for the upcoming 10.10, or are there any other plans?

Regards,

Thomas Kriener

We would like to express our gratitude for your patience as we conducted our investigation. It appears that there may be some ambiguity surrounding the question at hand.

It's worth noting that support for the backup of Exchange Online Mailboxes has been available since version 9.2, as documented in our release notes here: https://helpcenter.nakivo.com/Release-Notes/Content/v9-Release-Notes/v9.2-Release-Notes.htm

Furthermore, the inclusion of Modern Authentication support was introduced in version 10.7.1, as detailed in our release notes here: https://helpcenter.nakivo.com/Release-Notes/Content/v10-Release-Notes/v10.7.1-Release-Notes.htm

It's possible that there might be a requirement for something different or there may have been a misunderstanding when reviewing the release notes. If you require further clarification or have additional questions, please do not hesitate to reach out to our support team for assistance. We are here to ensure that you have a clear understanding of our product's capabilities. Thank you for your ongoing interest in NAKIVO Backup & Replication.

-

1 hour ago, tkriener said:

Hello,

we are evaluating a switch from our existing M365-Backup solution to Nakivo.

Are there any plans to support

- the backup of Exchange Online Mailboxes

- Modern Authentication

From the Release Notes of 10.9 I saw that the backup of Exchange Online Mailboxes is not supported yet.

In the user guide I found that the backup user needs to be excluded from conditional access. I know that in the past there were several limitations by MS, with APIs not supporting modern authentication, but as far as I know, those have been fixed. Is this perhaps already implemented for the upcoming 10.10, or are there any other plans?

Regards,

Thomas Kriener

@tkriener Hello. To address your inquiry effectively, we recommend generating and submitting a support bundle to support@nakivo.com by following the instructions outlined here: https://helpcenter.nakivo.com/display/NH/Support+Bundles

By doing so, you will facilitate a thorough examination of your issue by our esteemed Technical Support team. Simultaneously, this will enable us to escalate your feature request to our Product Development team for potential inclusion in our future development roadmap. Your cooperation in this matter is greatly appreciated, and we thank you for your understanding.

Meanwhile, I requested the information to inform the community about the situation.

Best regards

-

On 9/6/2023 at 2:42 PM, hakhak said:

Good morning, I have a problem: jobs that have expired are still physically present. Expired jobs are not deleted, and my disk space is full. Thank you for your help.

@hakhak Please share a screenshot of the repository status and the expiration status of the existing recovery points.

For example:

Repository status

Recovery points status

Can you confirm if it is the Forever incremental repository or the Inc_with_full repository? If you click on the job, go to the right pane and click on the target repository, you will get the following:

Forever Repository

Looking forward to your updates. Best regards

-

22 hours ago, Dalibor Barak said:

Hello,

I have a Synology DS1618+ with the latest DSM (DSM 7.2-64570 Update 3). I've tried the above procedure, but the update doesn't work, and the virtual appliance can't connect to the Transporter because it keeps reporting that the Transporter is Out Of Date.

When will the update package be available? Either for automatic or manual update?

Can you help me, Please?

Thanks

Dalibor Barak

@Dalibor Barak, we appreciate your patience and interest in the NAKIVO software. To assist you with your request, we kindly ask you to send us a support bundle.

It contains log files and system information that will help us identify the problem and provide a resolution.

Please remember to include the main database and specify your ticket ID #215999.

The instructions on how to generate and send a support bundle can be found in the NAKIVO Help Center at the following link: https://helpcenter.nakivo.com/User-Guide/Content/Settings/Support-Bundles.htm

Thank you for choosing NAKIVO, and we look forward to your reply and assisting you further.

-

29 minutes ago, Ethan Shutika said:

I did verify SMB 2 was running and the C$ admin and IPC are showing as shared.

@Ethan Shutika One more hint: try connecting to the administrative share on the physical machine at the OS level from the machine/NAS where the NAKIVO Director is installed. Kind regards.

-

12 minutes ago, Ethan Shutika said:

I did verify SMB 2 was running and the C$ admin and IPC are showing as shared.

@Ethan Shutika This issue seems to be specific and complex, and our support team is equipped to provide you with the personalized assistance needed to resolve it effectively. They have the expertise and tools to dig deeper into the problem, analyze logs, and provide tailored solutions to ensure your NAKIVO Backup & Replication experience runs smoothly.

Please send an email to our support team at support@nakivo.com, and include as much information as possible about your setup, the error message you're encountering, and any relevant configurations. This will help expedite the troubleshooting process and ensure a swift resolution to your issue.

-

2 hours ago, Ethan Shutika said:

I am getting the below error message when I try to add some physical machines from the manager. Any ideas?

An internal error has occurred. Reason: 1053 (SmbException).

@Ethan Shutika Thank you for reaching out to us. We appreciate your interest in NAKIVO Backup & Replication and are committed to ensuring your experience with our software is seamless and efficient.

In order to guarantee optimal performance and compatibility with your physical machine, we kindly request that you review the following system requirements outlined in our user guide:

SMB Protocol Version: Please ensure that your physical machine is configured with SMBv2 or a higher version of the SMB protocol. This is essential for NAKIVO to operate effectively. If you have a firewall in place, it is imperative to enable the corresponding rule for SMB-in to facilitate uninterrupted communication.

Administrative Shares: It is crucial that the default administrative shares on your physical machine are enabled and accessible over the network. This ensures that NAKIVO can perform its duties without any hindrances.

We understand the importance of these requirements, as they directly impact the functionality of our software. By adhering to these guidelines, you can be confident in the smooth operation of NAKIVO within your business environment.

Should you have any further questions or require assistance with the implementation of these requirements, please do not hesitate to contact our support team: support@nakivo.com

Your satisfaction is our top priority, and we look forward to hearing from you.
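The SMB requirements above (SMBv2 or later and reachable administrative shares) depend first on basic network reachability. As an initial check from the machine where the NAKIVO Director or Transporter runs, a minimal Python sketch can test whether TCP port 445 (the standard port for SMB over TCP) on the physical machine accepts connections. It only tests reachability: it does not negotiate an SMB dialect, list shares such as C$ or ADMIN$, or validate credentials, so a successful result does not by itself rule out the 1053 SmbException. `smb_port_reachable` is a hypothetical helper, not part of NAKIVO.

```python
import socket
import sys

SMB_PORT = 445  # SMB over TCP (direct hosting), used by SMBv2 and later


def smb_port_reachable(host: str, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:445 succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, SMB_PORT), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


if __name__ == "__main__":
    for target in sys.argv[1:]:
        state = "reachable" if smb_port_reachable(target) else "NOT reachable"
        print(f"{target}: TCP {SMB_PORT} {state}")
```

For example, running it with the physical machine's address as an argument prints whether TCP 445 on that host answers; a "NOT reachable" result points toward the firewall (SMB-in) rule mentioned above.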

-

1 hour ago, Ethan Shutika said:

I am getting the below error message when I try to add some physical machines from the manager. Any ideas?

An internal error has occurred. Reason: 1053 (SmbException).

@Ethan Shutika Hello, your information/request has been received and forwarded to our Level 2 Support Team. Meanwhile, the best way for both sides would be to generate and send a support bundle (https://helpcenter.nakivo.com/display/NH/Support+Bundles) to support@nakivo.com so our Technical Support team can investigate your issue in more detail. Thank you for understanding.

-

19 hours ago, hakhak said:

Good morning, I have a problem: jobs that have expired are still physically present. Expired jobs are not deleted, and my disk space is full. Thank you for your help.

@hakhak Hello, your information/request has been received and forwarded to our Level 2 Support Team. Meanwhile, the best way for both sides would be to generate and send a support bundle (https://helpcenter.nakivo.com/display/NH/Support+Bundles) to support@nakivo.com so our Technical Support team can investigate your issue in more detail.

Please, remember to include the main database and specify your ticket ID #215663.

Thank you for understanding.

-

5 hours ago, rimbalza said:

Hi, I'm using 10.8 on Linux to backup VMs from HyperV.

I have several VMs with a lot of RAM, like 32 or 48 GB. When I test my restores, I cannot use a host with less RAM than the original VM as the destination.

For example, I want to restore a VM that has 48 GB of RAM on a host that has 32 GB. The process does not even start; it blocks the configuration procedure, saying the host needs more RAM. I can find no way to resize the RAM or continue anyway.

This is a horrible design decision for at least a couple of reasons:

- In my case I just restore the VMs and do NOT power them on; there's an option for that. Still, Nakivo says I cannot restore.

- In an emergency, this requires you to have a full set of working hosts with at least the same RAM as you had before, and this again is an awful decision that hinders the ability to use Nakivo for real-world recovery scenarios.

I am in charge of the restore, and if I decide to restore to a host with less RAM than the original one, it should be my problem. I understand a warning from Nakivo; I cannot understand blocking the restore. On HyperV you can create any number of VMs with any amount of RAM, then decide which ones to start or resize. Reducing the restore options for no practical benefit and imposing artificial limits is just silly.

Is there a way to force Nakivo to continue the restore?

Thanks

@rimbalza We extend our gratitude for choosing NAKIVO Backup & Replication software and your keen interest in optimizing your operational efficiency.

In response to your query, we would like to draw your attention to a pivotal feature within our software's system settings. The "Check for sufficient RAM on the target host for replication/recovery jobs" checkbox is a significant consideration for ensuring seamless replication and recovery processes: https://helpcenter.nakivo.com/User-Guide/Content/Settings/General/System-Settings.htm

Please note that this checkbox may be enabled or disabled based on your operational preferences. We encourage you to review your system settings and assess whether this option aligns with your current requirements. Should you require further clarification, assistance in configuration, or have additional inquiries, our dedicated support team is readily available to provide the guidance you need.

Thank you for choosing NAKIVO Backup & Replication to enhance your data protection and management endeavors.
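The reply points to the "Check for sufficient RAM on the target host for replication/recovery jobs" setting. The following Python sketch models, under stated assumptions, what toggling such a check means for the 48 GB VM / 32 GB host case in the post: with the check on, an undersized host blocks the job; with it off, the job proceeds with a warning. Whether NAKIVO compares against total or free host RAM, and whether it emits any warning when the check is off, are assumptions; `ram_precheck` is a hypothetical function, not NAKIVO code.

```python
def ram_precheck(vm_ram_gb: float, host_ram_gb: float, check_enabled: bool) -> tuple[bool, str]:
    """Hypothetical model of a pre-restore RAM check.

    Returns (allowed, message). When the check is disabled, an undersized
    target host produces a warning instead of blocking the job.
    """
    if vm_ram_gb <= host_ram_gb:
        return True, "OK: target host has enough RAM"
    shortfall = vm_ram_gb - host_ram_gb
    if check_enabled:
        return False, f"Blocked: target host is {shortfall:g} GB short of the VM's {vm_ram_gb:g} GB"
    return True, f"Warning: target host is {shortfall:g} GB short of the VM's configured RAM"


if __name__ == "__main__":
    # The scenario from the post: a 48 GB VM restored to a 32 GB host.
    for enabled in (True, False):
        allowed, message = ram_precheck(48, 32, check_enabled=enabled)
        print(f"check_enabled={enabled}: allowed={allowed} -> {message}")
```

The design choice being illustrated is simply "block versus warn"; the sketch says nothing about whether the restored VM can later be powered on without reducing its RAM.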

-

3 hours ago, rimbalza said:

Hi, I'm using 10.8 on Linux to backup VMs from HyperV.

I have several VMs with a lot of RAM, like 32 or 48 GB. When I test my restores, I cannot use a host with less RAM than the original VM as the destination.

For example, I want to restore a VM that has 48 GB of RAM on a host that has 32 GB. The process does not even start; it blocks the configuration procedure, saying the host needs more RAM. I can find no way to resize the RAM or continue anyway.

This is a horrible design decision for at least a couple of reasons:

- In my case I just restore the VMs and do NOT power them on; there's an option for that. Still, Nakivo says I cannot restore.

- In an emergency, this requires you to have a full set of working hosts with at least the same RAM as you had before, and this again is an awful decision that hinders the ability to use Nakivo for real-world recovery scenarios.

I am in charge of the restore, and if I decide to restore to a host with less RAM than the original one, it should be my problem. I understand a warning from Nakivo; I cannot understand blocking the restore. On HyperV you can create any number of VMs with any amount of RAM, then decide which ones to start or resize. Reducing the restore options for no practical benefit and imposing artificial limits is just silly.

Is there a way to force Nakivo to continue the restore?

Thanks

Hello, @rimbalza, your information/request has been received and forwarded to our Level 2 Support Team. Meanwhile, the best way for both sides would be to generate and send a support bundle (https://helpcenter.nakivo.com/display/NH/Support+Bundles) to support@nakivo.com so our Technical Support team can investigate your issue in more detail.

Thank you for understanding.

-

3 hours ago, GregaPerko said:

I got an answer from support: the backup copy to tape feature is planned for version 10.11, expected to be released around November.

Grega

@GregaPerko Thank you for your post. Should you need any further information, please do not hesitate to contact us.

-

On 8/28/2023 at 2:22 PM, GregaPerko said:

What about File Share (CIFS share, NFS share) backup to tape, when this will be added?

@GregaPerko Thank you for your question. When it comes to the capability of backing up File Shares (CIFS share, NFS share) directly to tape, I'd like to clarify the current status:

- If we are discussing the direct backup to tape, I want to inform you that this functionality is not currently supported on any platform. However, I encourage you to consider this as a feature request, which will be prioritized based on user demand and needs.

- On the topic of backup copy to tape, specifically in relation to supporting the NAS backup feature, I'm pleased to share that we have plans to implement this feature in one of the nearest versions. Your interest and inquiries play a crucial role in shaping our product's development. We appreciate your understanding and collaboration.

-

1 hour ago, GregaPerko said:

What about File Share (CIFS share, NFS share) backup to tape, when this will be added?

@GregaPerko Hello. The best way would be to generate and send a support bundle (https://helpcenter.nakivo.com/display/NH/Support+Bundles) to support@nakivo.com so our Technical Support team can investigate it and forward your feature request to our Product Development team for possible future implementation.

Thank you for understanding.

-

7 hours ago, Bedders said:

Ok, thank you for the information. That's disappointing, as now I need to find a download for the Windows version of NAKIVO, like https://d96i82q710b04.cloudfront.net/res/product/NAKIVO Backup_Replication v10.9.0.76010 Updater.exe but one that matches the Transporter version available on Synology.

Alternatively do you have the download link like above but for just the Synology Transporter version so I can install it via SSH? Specifically it's transporter version 10.8.0.n72947

Thanks in advance

@Bedders, thank you for getting back to us! Please let us know the model of your Synology NAS and your DSM version, and we will send you the appropriate updater. We are looking forward to hearing from you.
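The thread shows a 10.8 Transporter (package version 10.8.0.n72947) being reported as "Out Of Date" against a 10.9 Director (updater build 10.9.0.76010). A minimal Python sketch, assuming the compatibility rule is that the major and minor versions must match, can compare the two strings. The posts only confirm that 10.8 and 10.9 are incompatible; whether build numbers must also match is not stated, and `parse_version` and `same_release` are hypothetical helpers.

```python
import re


def parse_version(text: str) -> tuple[int, ...]:
    """Pull the numeric fields out of a build string.

    '10.8.0.n72947' -> (10, 8, 0, 72947); non-digit prefixes such as 'n' are ignored.
    """
    return tuple(int(part) for part in re.findall(r"\d+", text))


def same_release(director: str, transporter: str) -> bool:
    """Assumed compatibility rule: major and minor versions must match."""
    return parse_version(director)[:2] == parse_version(transporter)[:2]


if __name__ == "__main__":
    director = "10.9.0.76010"      # build from the updater file name in the post
    transporter = "10.8.0.n72947"  # Synology Transporter version from the post
    print(same_release(director, transporter))  # False
```

Comparing only the first two fields keeps the sketch tolerant of differing build numbers; if exact builds turn out to matter, compare the full tuples instead.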


v10.10: Introducing Real-Time Replication (Beta) for VMware


NAKIVO Backup & Replication v10.10 is now available to download! The latest release adds Real-Time Replication (Beta) for VMware to the solution’s feature set. The all-new functionality can help you achieve high availability in different scenarios and recover critical workloads with near-zero data loss:

- Create replicas of VMware vSphere VMs, including application data and configuration files.

- Keep the replicas updated as changes are made, in real time.

- Fail over to your replicas almost instantly during a failure or disaster.

- Achieve short RTOs and RPOs as low as 1 second.

Try the new functionality in your environment today. Download the v10.10 Free Trial and take your disaster preparedness to new heights!
