All Activity

Slow O365 first Backup

The Official Moderator replied to Krisko Liva's topic in Microsoft Office 365 Backup

Dear @Krisko Liva, We apologize for the issues you've encountered with your O365 backup. To help resolve your issue, please contact our NAKIVO Support Team directly (support@nakivo.com) and send us a support bundle: https://helpcenter.nakivo.com/User-Guide/Content/Settings/Support-Bundles.htm This will allow our team to investigate your situation and try to provide a fix. Please let me know if you need more information. Best regards -

I'm testing O365 backup, and the first backup never ends. Many mailboxes failed, and I see no data traffic... so I have to cancel the job and start it again. It has taken me more than 15 days to back up 20 mailboxes, and I am still not finished. Does anyone have experience with this?

- 1 reply

-

- 1

-

-

NAKIVO Backup for Proxmox

The Official Moderator replied to tommy.cash's topic in NAKIVO Backup for Proxmox

https://www.nakivo.com/proxmox-backup/

- 9 replies

-

Tagged with:

- nakivo

- backup

- proxmox backup

- best proxmox backup

- free proxmox backup

- download free

- backup software

- backup fast

- great ui

- proxmox ve

- virtual environment backup

- proxmox disaster recovery

- proxmox snapshot

- data protection proxmox

- proxmox backup server

- vm backup solutions

- proxmox backup guide

- proxmox replication

- proxmox backup storage

-

Also needing proxmox support!

- 9 replies

-

- 1

-

-

-

Thanks, I really appreciate your contribution to this problem; I have resolved it. Again, thank you.

- 6 replies

-

- 1

-

-

- incompatibility of versions

- transporter version

- (and 5 more)

-

@Ana Jaas, to update NAKIVO on your Synology DS1621+ with an AMD Ryzen 1500B, please follow our manual update guide here: https://helpcenter.nakivo.com/Knowledge-Base/Content/Installation-and-Deployment-Issues/Updating-Manually-on-Synology-NAS.htm?Highlight=DSM At step 6, download PKG for AMD Ryzen 1500B CPU here: https://maglar.box.com/shared/static/drpedy89j4652zfcagspxqe8jtydnwc7.sh For any further assistance, feel free to request a remote support session. Best

- 6 replies

-

-

Of course... Device model: DS1621+ CPU: AMD Ryzen 1500B

- 6 replies

-

- 1

-

-

-

I sincerely hope that you can fully support Proxmox. I would prefer to use a Nakivo solution (which has satisfied us for a long time on ESX) rather than switching to Competitor.

- 9 replies

-

- 1

-

-

-

NAKIVO Backup for Proxmox

The Official Moderator replied to tommy.cash's topic in NAKIVO Backup for Proxmox

Dear @Argon, Thank you for your honest feedback. This helps us understand what our users need and how to improve our product moving forward. We have tried to be as clear as possible that this is only an agent-based approach to backing up Proxmox VE, and wanted to inform users who may not be aware of this approach in NAKIVO Backup & Replication. As for the native Backup Server Tool, it may have some advantages but it lacks several important capabilities such as multiple backup targets (for example, cloud) or recovery options (for example, granular recovery of app objects). We are continuously working on improving our software solution, and we are investigating native Proxmox support. Thank you once again for your input. Best regards

- 9 replies

-

- 4

-

-

-

-

@Ana Jaas, I'm sorry to hear about the difficulties you're experiencing. Please share your Synology device model, CPU version, and RAM to help our support team provide a swift resolution. You can also reach out directly to our support team at support@nakivo.com.

- 6 replies

-

-

I really like Nakivo, but your support for Proxmox is shameful. You do not support Proxmox; you just suggest that we install your Linux agent on it, which has in fact been compatible for a long time since it is Debian Linux, and which involves backing up the entire system as a whole. Are you riding the wind of industry panic with VMware? This does not support Proxmox as a hypervisor, and this backup should be classified as a physical backup of a Linux host. But the worst thing is to say that this way of doing things is better than Proxmox Backup Server... I believe that you have not studied the competitor very well before trying to sell your product as "compatible". Your solution does not at all allow you to make a serious backup of VMs and CTs independently of the hypervisor.

- 9 replies

-

- 5

-

-

-

-

-

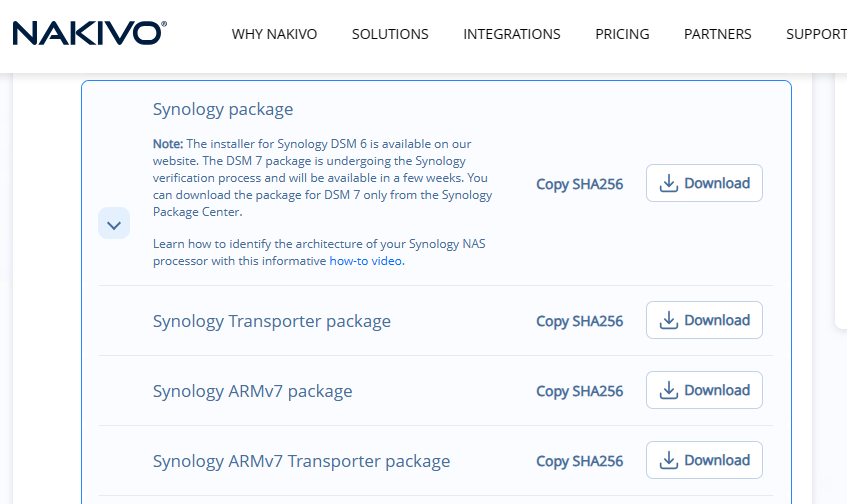

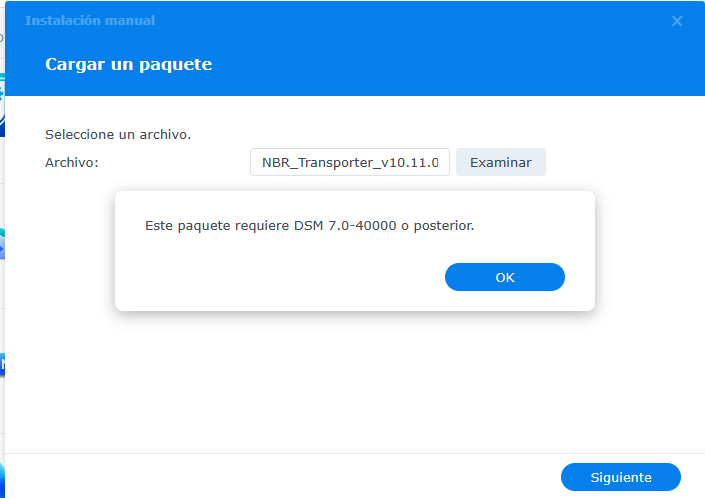

Hello, thanks for answering... I was able to upload a transporter version on my QNAP device; it worked and is still working. I tried to do the same with the Synology transporter: I downloaded the updater and uploaded it for manual installation, but the following message appeared: "This package requires DSM 7.0-40000 or later." My Synology device runs DSM 7.2.1 Update 4. I think this error should not appear, since my DSM version is within the range stated in the message. The updater that I downloaded is from the official NAKIVO website. I downloaded and tried two updaters, and with both I got the same message: the Synology Transporter package and the Synology ARMv7 Transporter package. Help me pleaseeeee :(
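As a quick sanity check of the version claim in this post, here is a minimal sketch that compares a DSM version string against the minimum quoted in the error message. The parsing rules (a dotted release with an optional `-build` suffix) and both function names are assumptions made for illustration; this is not Synology's or NAKIVO's actual package check. Since the moderator's reply in this thread points to a CPU-specific package, the message may well be triggered by something other than the DSM version.

```python
import re


def parse_dsm_version(text):
    """Parse strings such as '7.0-40000' or '7.2.1' into (release tuple, build or None)."""
    match = re.fullmatch(r"(\d+(?:\.\d+)*)(?:-(\d+))?", text.strip())
    if match is None:
        raise ValueError(f"unrecognized DSM version: {text!r}")
    release = tuple(int(part) for part in match.group(1).split("."))
    build = int(match.group(2)) if match.group(2) else None
    return release, build


def meets_minimum(installed, minimum):
    """Return True if the installed version is at least the minimum.

    Build numbers are compared only when the releases tie and both builds are known.
    """
    inst_release, inst_build = parse_dsm_version(installed)
    min_release, min_build = parse_dsm_version(minimum)
    # Pad to equal length so that (7, 0) and (7, 0, 0) compare as equal.
    width = max(len(inst_release), len(min_release))
    inst_padded = inst_release + (0,) * (width - len(inst_release))
    min_padded = min_release + (0,) * (width - len(min_release))
    if inst_padded != min_padded:
        return inst_padded > min_padded
    if inst_build is not None and min_build is not None:
        return inst_build >= min_build
    return True


# Versions taken from the post: installed DSM 7.2.1, required 7.0-40000.
print(meets_minimum("7.2.1", "7.0-40000"))
```

Under these assumptions the installed version does satisfy the stated minimum, which is consistent with the poster's reading that the message is misleading.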

- 6 replies

-

- 1

-

-

-

Hello, @LRAUS, We acknowledge the need for agentless support for Proxmox VE and plan to add this feature to our roadmap after investigation. However, we don't have an ETA yet. We'd appreciate knowing how important this is for you, so we can prioritize it accordingly. Your input is valuable in shaping our development efforts. Best

- 2 replies

-

- data protection

- proxmox ve

-

(and 2 more)

Tagged with:

-

Repository of previous versions

The Official Moderator replied to Clemilton's topic in General threads

Hello, @Clemilton. For the export and import of settings between Nakivo versions, it's important to note that we generally support the N-1 version approach and don't maintain a public repository of older releases. However, to assist with your specific situation, we can provide a link to download the Linux version of NAKIVO Backup & Replication 10.2. Please use the following link to access the installer: https://maglar.box.com/shared/static/ljsx7h9fyjko62nigfxqv98mqezcz82q.sh If you need further assistance, please contact our support team directly: support@nakivo.com -

Nakivo 10.2 and vCenter 7.0.3

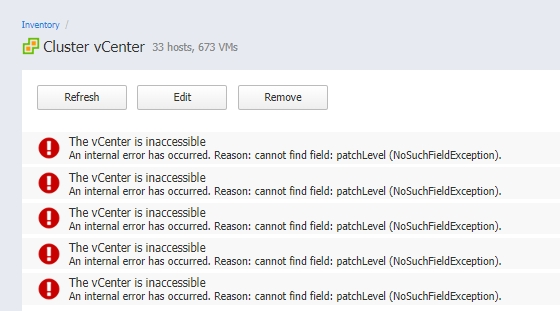

The Official Moderator replied to Clemilton's topic in General threads

Hello, @Clemilton, VMware vSphere 7.0.3 is officially supported in NAKIVO Backup & Replication starting from version 10.5.1 onwards. To ensure compatibility and to access the latest features and fixes, updating your NAKIVO software instance to a supported version is recommended. For more details on version compatibility and features, please refer to the Release Notes available in the Help Center: https://helpcenter.nakivo.com/Release-Notes/Content/Release-Notes.htm If you need further assistance, please contact our support team directly: support@nakivo.com -

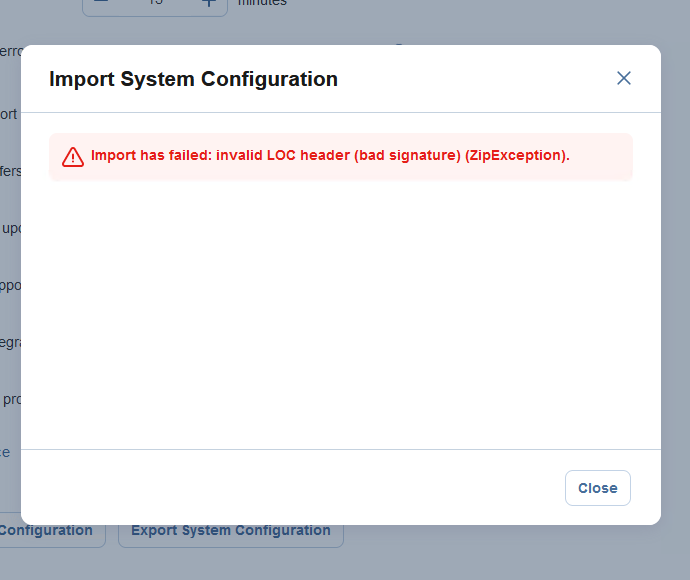

Hi @Clemilton, the error "Import has failed: invalid LOC header (bad signature) (ZipException)" suggests data corruption in the export/import of configuration file data. To troubleshoot this, please provide:

1) Steps to reproduce the issue
2) The exported system configuration file from Nakivo 10.2
3) A support bundle from the new Nakivo 10.11 installation: https://helpcenter.nakivo.com/User-Guide/Content/Settings/Support-Bundles.htm

Upload the files at https://upload.nakivo.com/c/uploadPackage, mentioning ticket ID #245373 in the description.

As a workaround, try copying the "userdata" folder from your Nakivo 10.2 installation to the same location on the 10.11 Director server:

Linux: /opt/nakivo/director/userdata

We'll review the provided data and work to identify the cause of the import failure. If you need further assistance, please contact our support team directly: support@nakivo.com
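A `ZipException` about a bad LOC (local file header) signature generally means the file being read is not a well-formed ZIP archive. One way to check an exported configuration file before importing it is sketched below. It assumes the export is a ZIP-format archive, which the error message suggests but this thread does not confirm. The function name and the sample member name are hypothetical, and only Python's standard `zipfile` module is used.

```python
import io
import zipfile


def check_zip_integrity(source):
    """Return a short status string for a path or a seekable file-like object."""
    if not zipfile.is_zipfile(source):
        return "not a ZIP archive"
    try:
        with zipfile.ZipFile(source) as archive:
            # testzip() reads every member and returns the first bad name, or None.
            bad_member = archive.testzip()
    except zipfile.BadZipFile as error:
        return f"corrupt archive: {error}"
    if bad_member is not None:
        return f"corrupt member: {bad_member}"
    return "ok"


# Demonstration on an in-memory archive (stand-in for a real export file).
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("settings.txt", "example configuration data")
print(check_zip_integrity(buffer))
```

For a real file, pass its path instead of the in-memory buffer. Any result other than "ok" would suggest the export was damaged in transit or on disk, so re-exporting may be worth trying before anything else.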

-

Hi, Is agentless support coming as well? Large clusters can't use agent-based backups due to multiple VLANs, etc.

- 2 replies

-

- 1

-

-

-

NAKIVO Backup for Proxmox

The Official Moderator replied to tommy.cash's topic in NAKIVO Backup for Proxmox

We’re excited to announce that NAKIVO has expanded the range of supported platforms to include Proxmox VE:

- 9 replies

-

- 4

-

-

-

-

We’re excited to announce that NAKIVO has expanded the range of supported platforms to include Proxmox VE! The agent-based backup support for Proxmox VM data features:

- Full and incremental, image-based backups of Proxmox VM data, applications and operating system
- Backup copy to remote sites, public clouds, other S3-compatible platforms and tape
- Full VM data recovery to an identical VM on the same hypervisor using bare metal recovery
- Instant recovery of files and app objects to the original or a custom location

Download the full-featured Free Trial of NAKIVO Backup & Replication and start protecting your Proxmox VM data today.

- 2 replies

-

-

Hi guys. I've been searching for a few days and I can't find any reference to this error. I'm trying to import the configuration of a Nakivo 10.2 server to a new installation with Nakivo 10.11. Thanks.

- 1 reply

-

- 1

-

-

Hi Guys I'm having trouble exporting settings from Nakivo 10.2 and importing to version 10.11. I would like to reinstall version 10.2 to perform this action, but I can't find any repository of old versions. Is there no possibility to download previous versions of Nakivo?

- 1 reply

-

- 1

-

-

Hey guys. I run nakivo 10.2 with vCenter 6.7. I updated vCenter to version 7.0u3 and after the update, I receive the following error. I noticed that a few years ago there was a .sh file that was made available to fix this problem without the need to update to newer versions. I would like to know if anyone still has this file and could provide it to me.

-

Hello @Ana Jaas, thanks for reaching out. To be able to provide the steps to take, we need more information about your NAS devices. Could you please provide us with the specifications of your NAS devices? We'll need details such as the model, operating system version, and CPU version. This information will enable us to guide you through updating the Transporter to match the version of the Director. Alternatively, you can navigate to Expert Mode: https://helpcenter.nakivo.com/User-Guide/Content/Settings/Expert-Mode.htm?Highlight=expert mode and enable system.transporter.allow.old to address the compatibility issue. If you find these steps challenging or would prefer direct assistance, we're more than willing to schedule a remote session to help you navigate through the process: support@nakivo.com. Looking forward to your response.

- 6 replies

-

- 2

-

-

-

-

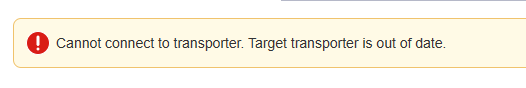

Hi, I have a problem that appears to be a version incompatibility. I am running the most recent version of NAKIVO, 10.11, and I have two NAS devices, one with Transporter version 10.8 and another with version 10.5. When I try to add them as nodes, I get the following error. My idea is to downgrade to 10.8 or similar, but I can't find the installers on the official website. Could you help me get past versions of NAKIVO? If you have any other ideas or suggestions, I'm open to trying them.
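To make the mismatch described in this post concrete, here is a minimal sketch that flags Transporters whose version is lower than the Director's. The node names and both function names are hypothetical, and the rule "a Transporter should match the Director version" is taken from the moderator's reply in this thread rather than from any NAKIVO API. Versions are compared as integer tuples because a plain string comparison would wrongly rank "10.8" above "10.11".

```python
def parse_version(text):
    """Turn a dotted version such as '10.11' into a tuple of integers."""
    return tuple(int(part) for part in text.split("."))


def outdated_transporters(director_version, transporters):
    """Return the names of transporters whose version is lower than the Director's."""
    director = parse_version(director_version)
    return [
        name
        for name, version in transporters.items()
        if parse_version(version) < director
    ]


# Versions from the post; the node names are made up for this example.
nodes = {"nas-a": "10.8", "nas-b": "10.5"}
print(outdated_transporters("10.11", nodes))  # ['nas-a', 'nas-b']
```

Both devices from the post come out as outdated relative to Director 10.11, which matches the error the poster reports when adding them as nodes.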

- 6 replies

-

- 2

-

-

-

-

@SysErr Thank you for your patience as we looked into your backup copy job to Backblaze B2. We've reviewed the logs and found no errors with the "Clone-Nakivo-to-Backblaze" job. With backups to S3 storage types like AWS, Backblaze, or Wasabi, there may be periods of time when no data transfers are happening. This typically happens at the end of the backup process. At this stage, NAKIVO makes an API call to move data from a temporary "transit" folder to the repository. Depending on how much data there is, this moving step can take a while, and during this time, you won't see any data transfers happening on our side. To help with this and improve your backup job performance, we recommend setting your backup jobs to the "active" full backup type for any backups to S3 storage. Also, to prevent any overlap and ensure both your clone and original backup jobs run without issues, it's a good idea to schedule them with more time in between. This gives the clone job enough time to finish up, considering the extra time needed for data to move to the cloud storage. We're here if you need more help or have other questions. Your feedback helps us make our service better for everyone.