
Posts posted by The Official Moderator

-

On 11/28/2023 at 9:37 PM, perrcla said:

Hi, this link doesn't work. Can you provide the correct link?

I need this exact version for manual install: 10.9.0.75563.

Latest version in synology package center is 10.8.0.73174.

Thank you.

Hello, @perrcla, as of December 2023, the latest version is 10.10.1, and it can be downloaded here:

https://www.nakivo.com/resources/download/update/

Instructions for updating an existing NAKIVO Backup & Replication installation are available in our documentation here. Make sure to detach any Microsoft 365 or CIFS/NFS repositories beforehand, if configured:

If you have our solution installed on a Synology NAS with DSM 7, follow these instructions for a manual update:

Unfortunately, we do not host downloads for older versions such as 10.9. We recommend keeping your NAKIVO instance updated to the latest available build for enhancements, compatibility updates, and critical fixes.

Please let me know if you have any other questions.

-

On 11/29/2023 at 12:17 AM, Leij said:

I have done those steps; unfortunately, the specific transporter items are still grayed out.

EDIT: I found out that the items are grayed out if the transporter has been configured with the "Enable Direct Connect for this transporter" option. Would you mind explaining the benefits of using this option with Automatic selection, versus deselecting this option and manually configuring the transporter at the backup job level?

@Leij, when an ESXi host/cluster is added with the Direct Connect feature enabled, only the specific transporter configured for Direct Connect can be used as the source transporter, which is why the other transporters are grayed out.

If the ESXi host/cluster is added without enabling Direct Connect, you can manually specify the transporter to be used as the source.

Manual selection lets you balance the load between jobs. Here's an overview of Direct Connect: https://helpcenter.nakivo.com/User-Guide/Content/Overview/Direct-Connect.htm

Let us know if you need more information.

-

Beta testing for NAKIVO Backup & Replication v10.11 is now underway, with new features for infrastructure monitoring, granular recovery and workflow optimization. Here are the highlights of this release:

- Alerts and Reporting for IT Monitoring: Create custom alerts for suspicious activity and get detailed reports about VMware VMs, hosts and datastores.

- Backup for Oracle RMAN on Linux: Protect your Linux-based Oracle databases using the same unified interface.

- File System Indexing: Quickly locate specific files/folders in your backups to save time during granular recoveries.

- Backup from HPE Alletra and HPE Primera Storage Snapshots: Boost performance using native storage snapshots for VM backups.

- In-Place Archive Mailbox, Litigation Hold and In-Place Hold Support: Protect additional categories in your Exchange Online mailboxes for easier compliance.

- Universal Transporter: Improve efficiency and reduce network load with a single Transporter for multiple workload types on the same host.

Try v10.11 Beta now, and share your feedback about the new features to get a $30 Amazon eGift Card!

-

On 11/25/2023 at 8:30 AM, Jorge Martin San Jose said:

Hi all

We are thinking of replacing our current Microsoft DPM backup system with Nakivo, and I have many doubts about the storage configuration and the technology used by Nakivo.

The idea is to have a server with 40 TB of SSD storage for disk backups; all the disks will be enterprise SSDs.

To save space, we are interested in making synthetic full backups of our VMs. This is where my doubts begin, after our experience with Microsoft DPM:

- What file system does Nakivo use for synthetic full backups?

- Is ReFS necessary to perform synthetic full backups? (Does Nakivo use ReFS for block cloning?)

- Is there any guidance for configuring the file system underlying the Nakivo repository? I mean things like interleave size, block size, and file system. Our idea is to set up the server with Windows using Storage Spaces so that we can expand the pool if needed.

My experience indicates that without an efficient storage configuration, random write backup speed drops dramatically.

The competitor's forum is full of posts about performance issues, and the cause is undoubtedly poorly configured storage. ReFS uses a large amount of memory when deleting large files, such as when purging old backups. I have seen it use up to 80 GB of RAM just to perform a purge, and this is due to storage performance.

ReFS's uncontrolled metadata restructuring tasks and cache utilization also degrade performance.

Thank you very much for clarifying my initial doubts, surely after receiving your answer I will have new questions.

Regards

Jorge Martin

@Jorge Martin San Jose , thank you for reaching out to us. In the current NAKIVO implementation, we support two types of repositories: forever incremental and incremental with full.

The incremental with full repository type requires more space due to separate full recovery points but generally exhibits better reliability during emergencies such as power outages or hardware failures.

The forever incremental repository has a different structure and may require occasional maintenance, similar to a "defragmentation" process. Both repository types are compatible with the ReFS filesystem, although we are currently investigating potential performance differences compared to other file systems like NTFS or FAT.

For larger repository sizes, especially those exceeding 10 TB, we recommend using the "Incremental with full" repository type. However, in the scenario you describe, it is also feasible to create multiple repositories of 8 to 10 TB each. If you opt for the "Incremental with full" repository type, we suggest using active full backups instead of synthetic full backups.
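To get a feel for the space trade-off described above, the following Python sketch estimates raw repository usage for both repository types and how many 8 to 10 TB repositories a 40 TB server would hold. It is a back-of-the-envelope model, not NAKIVO's sizing method: the full backup size, daily change rate, retention window and full-backup interval are hypothetical inputs, and compression and deduplication are ignored.

```python
import math


def incremental_with_full_tb(full_tb: float, daily_change: float,
                             days: int, full_every: int) -> float:
    """Estimate space for a chain that stores a full recovery point every
    `full_every` days and an increment (full_tb * daily_change) on other days."""
    fulls = math.ceil(days / full_every)
    increments = days - fulls
    return fulls * full_tb + increments * full_tb * daily_change


def forever_incremental_tb(full_tb: float, daily_change: float, days: int) -> float:
    """Estimate space for one initial full followed only by increments."""
    return full_tb + (days - 1) * full_tb * daily_change


def repositories_needed(total_tb: float, max_repo_tb: float) -> int:
    """Number of repositories when total_tb is split into chunks of at most max_repo_tb."""
    return math.ceil(total_tb / max_repo_tb)


# Hypothetical inputs: 5 TB of source data, 2% daily change, 14 days kept, weekly full.
print(round(incremental_with_full_tb(5, 0.02, 14, 7), 2))  # 11.2
print(round(forever_incremental_tb(5, 0.02, 14), 2))       # 6.3
print(repositories_needed(40, 10), repositories_needed(40, 8))  # 4 5
```

With these assumed numbers, the separate weekly full recovery points roughly double the space compared with a single full plus increments, which is the cost paid for the better resilience of the "Incremental with full" type.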

Although the final backup size remains the same for both types, they differ in how a full backup is created. Synthetic full backups leverage previous backup information, requiring consistency checks, while active full backups pull data directly from the VM. Feel free to explore these options and test the described layout, the different repository types, and overall NAKIVO performance using our free trial version.
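A conceptual sketch of that difference: a synthetic full is assembled from the previous full plus the later increments already in the repository (so it depends on that stored data being consistent), while an active full reads every block from the source again. The block-map model, the SHA-256 checksums and all names below are illustrative assumptions, not NAKIVO's internal format.

```python
import hashlib
from typing import Dict, List

# Hypothetical model: a backup is a mapping of block index -> block contents.
Blocks = Dict[int, bytes]


def checksum(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def synthetic_full(previous_full: Blocks, increments: List[Blocks],
                   expected: Dict[int, str]) -> Blocks:
    """Assemble a full recovery point from data already in the repository.

    Increments hold only changed blocks and are applied oldest first, so later
    changes overwrite earlier ones. Each resulting block is then verified against
    a checksum recorded at backup time, standing in for the consistency checks
    a synthetic full depends on."""
    result = dict(previous_full)
    for increment in increments:
        result.update(increment)
    for index, data in result.items():
        if checksum(data) != expected.get(index):
            raise ValueError(f"block {index} failed the consistency check")
    return result


def active_full(source_disk: Blocks) -> Blocks:
    """Read every block directly from the source VM disk."""
    return dict(source_disk)


source = {0: b"boot", 1: b"data-v2", 2: b"logs-v3"}
previous = {0: b"boot", 1: b"data-v1", 2: b"logs-v1"}
increments = [{1: b"data-v2", 2: b"logs-v2"}, {2: b"logs-v3"}]
expected = {i: checksum(b) for i, b in source.items()}

print(synthetic_full(previous, increments, expected) == active_full(source))  # True
```

Both paths produce the same full recovery point, which matches the note that the final backup size does not differ; the synthetic path avoids re-reading the VM but fails if any stored block is damaged.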

Should you require further assistance, please don't hesitate to reach out to us on the forum or by email at support@nakivo.com.

-

On 11/27/2023 at 3:38 AM, conalhughes said:

May I know if the Basic license supports single-file restoration to email? I understand it doesn't support direct restoration, but does it support restoring via email or download?

Thanks!

Hello @conalhughes, the "download to browser or send via email" recovery method is supported in all editions of NAKIVO Backup & Replication.

At the Files step of the recovery wizard, select the files you want to recover and then select "Forward via Email" or "Download" for "Recovery type". For more details, please refer to: https://helpcenter.nakivo.com/User-Guide/Content/Recovery/Granular-Recovery/File-Recovery/File-Recovery-Wizard-Options.htm#Forwardi

If you have any questions about licensing or features, please feel free to reach out.

-

On 11/24/2023 at 12:15 PM, pol said:

Hi everyone,

I make one primary full backup and six incremental backups per week to a NAS, one each night. I want to make a full backup copy from the NAS to tape every day, during the daytime when the primary backup is not running. How can I achieve this behavior? Is it possible without making a full backup to the NAS every time?

Hello Pol, yes, it is possible.

To create a full backup copy from NAS to tape every day:

1. Create a backup copy job to tape: https://helpcenter.nakivo.com/User-Guide/Content/Backup/Backing-Up-to-Tape/Backing-Up-to-Tape.htm

2. At the Schedule step of the wizard, set the job to start after the original job with a 5-minute delay.

3. At the Options step, set the full backup option to "Always". For details, see: https://helpcenter.nakivo.com/User-Guide/Content/Backup/Backing-Up-to-Tape/Tape-Backup-Wizard-Options.htm

Let us know if you need further assistance.

-

1 hour ago, Leslie Lei said:

The ticket is #225141.

@Leslie Lei, NAKIVO Backup & Replication currently uses a similar workflow, based on proprietary change tracking, for both EC2 instances and physical machines: it reads the whole disk(s) and compares them with existing backup data to identify changes. In some cases, a backup can take longer, and there may be zero data transfer during a job run while data is being checked but not written.
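The following Python sketch illustrates why a run without native change tracking can show long periods of zero transfer: every block has to be read and compared even when almost nothing changed, whereas native change tracking hands the backup the list of changed blocks up front. The block size, SHA-256 hashing and function names are assumptions for illustration; the post does not describe how NAKIVO's proprietary change tracking actually compares data.

```python
import hashlib
from typing import Dict, List, Tuple

BLOCK_SIZE = 4  # tiny block size to keep the example readable; real block sizes are far larger


def split_blocks(disk: bytes, block_size: int = BLOCK_SIZE) -> List[bytes]:
    return [disk[i:i + block_size] for i in range(0, len(disk), block_size)]


def hash_blocks(disk: bytes) -> Dict[int, str]:
    """Per-block hashes kept from the previous backup (hypothetical metadata)."""
    return {i: hashlib.sha256(b).hexdigest() for i, b in enumerate(split_blocks(disk))}


def full_read_diff(disk: bytes, previous: Dict[int, str]) -> Tuple[Dict[int, bytes], int]:
    """No native change tracking: read and hash every block, keep the ones whose
    hash differs from the previous backup. Returns (changed blocks, bytes read)."""
    changed: Dict[int, bytes] = {}
    bytes_read = 0
    for index, block in enumerate(split_blocks(disk)):
        bytes_read += len(block)
        if previous.get(index) != hashlib.sha256(block).hexdigest():
            changed[index] = block
    return changed, bytes_read


def tracked_diff(disk: bytes, changed_indices: List[int]) -> Tuple[Dict[int, bytes], int]:
    """Native change tracking: the platform reports which blocks changed,
    so only those blocks are read."""
    blocks = split_blocks(disk)
    changed = {i: blocks[i] for i in changed_indices}
    return changed, sum(len(b) for b in changed.values())


old_disk = b"aaaabbbbccccdddd"
new_disk = b"aaaabbbbXXXXdddd"
print(full_read_diff(new_disk, hash_blocks(old_disk)))  # ({2: b'XXXX'}, 16)
print(tracked_diff(new_disk, [2]))                      # ({2: b'XXXX'}, 4)
```

Both approaches transfer the same single changed block, but the full-read approach reads the entire disk to find it; on a large disk, that reading corresponds to the "checking but not writing" phase with near-zero transfer.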

The difference in transfer rate for VMware vSphere and Hyper-V VMs has to do with NAKIVO Backup & Replication leveraging native change tracking technologies for backup jobs. These technologies allow for faster job runs. Our Dev Team is working on enhancing change tracking for physical machines to increase the speed of physical machine backup. However, we cannot provide an exact ETA at this time. Thank you again for sharing your feedback. Let us know if you have any other questions.

-

13 hours ago, Leslie Lei said:

We just tested AWS EC2 backup and found that the job runs slowly. Support wasn't able to provide an answer.

When backing up an EC2 instance, the Nakivo Director creates a snapshot of the target machine and hot-adds the snapshot to the Transporter, which then reads the data in the snapshot. Because the Transporter is a Linux machine and the target server is a Windows server, we couldn't tell whether the problem was the Linux machine reading the Windows NTFS volume.

We changed the setup to the physical machine backup method instead. Basically, we treat the EC2 server as a physical server and deploy the Nakivo agent on it. The agent on the target server collects data from the volume and sends it to the Transporter, which eliminates any possible NTFS volume and Linux system issues. The target server and the Transporter are also in the same network subnet in AWS to rule out network issues. However, the backup process is still slow.

The slowness is not caused by the performance of the target machine, the Transporter, or the network. If I watch an incremental backup, the job runs at 100-300 Mb/s for the first 10 minutes. After that, the speed drops to 0.01 Mb/s or even 0.0 Kb/s for almost an hour, then returns to 100-300 Mb/s for the last 10 minutes. This makes the backup take much longer than it should. The issue happens with both AWS EC2 backup and physical server backup; VMware backup works fine. For a server of the same size, the incremental job for the ESXi VM takes only about 3 minutes.

I think there is a bug in the Nakivo software where changed block tracking doesn't work, or doesn't work efficiently, in EC2 instance or physical machine backup jobs. The job should quickly skip any data blocks with no changes, but in the current backup process I can tell the job is still walking through unchanged data blocks. It doesn't know which blocks have no changes, or it doesn't know how to skip them. That's why it spends most of the time walking through unchanged data.

Hello @Leslie Lei, I've forwarded your message to our Level 2 Support Team. Can you please provide me with the original ticket number associated with your support request? This will help us track the progress of your case and determine whether the ticket is still open or was closed without resolution. Thank you for your feedback!

-

9 hours ago, Dmytro said:

Nakivo B&R has a great option to back up to Wasabi cloud storage.

However, these backups are unencrypted.

Is it possible to add an option to encrypt those backups?

@Dmytro The best way would be to generate and send a support bundle (https://helpcenter.nakivo.com/display/NH/Support+Bundles) to support@nakivo.com so our Technical Support team can investigate it and forward your feature request to our Product Development team for possible future implementation.

-

On 11/14/2023 at 9:27 AM, Slawomir said:

Hi,

where can i download nakivo 10.10.1 for synology dsm 7?

Regards,

Slawomir

@Slawomir We understand that timely support is crucial to your workflows, and we want to ensure that we do everything possible to resolve this matter. Please get in touch with us if you have any additional information or updates regarding the issue. Our Support Team is here to help you in any way we can, and we are committed to finding a solution as quickly as possible: support@nakivo.com

Thank you for choosing NAKIVO. We look forward to hearing from you soon.

-

10 hours ago, Slawomir said:

Hi,

where can i download nakivo 10.10.1 for synology dsm 7?

Regards,

Slawomir

@Slawomir NAKIVO 10.10.1 is still unavailable in the Synology Package Center, and we have no ETA yet since we are awaiting approval from Synology. However, installation via SSH may be possible for certain devices; please provide us with the model of your NAS and its DSM version (a screenshot may be useful).

-

On 11/10/2023 at 8:44 PM, Leslie Lei said:

I think the developer made a fundamental mistake here. The repository should only be responsible for the storage type (disk or tape), where the data is stored (local, NAS, or cloud), and how the data is stored (compression and encryption). The backup job should determine how the data is structured: the "Forever incremental" and "Incremental with full" options should be in the job schedule section, and the retention policy determines how long the data is kept. Whether the data is "Forever incremental" or "Incremental with full" should be decided by the backup job itself, not by the repository.

@Leslie Lei Thank you for your patience. Unfortunately, it is not currently possible to configure the "Forever incremental" and "Incremental with full" options at the job level, because they are two completely different repository types. However, we appreciate your feedback and have filed a feature request for this capability. We will consider it for implementation in future releases based on customer demand and other factors. In the meantime, please let us know if you have any other questions or concerns.

-

On 11/10/2023 at 8:44 PM, Leslie Lei said:

I think the developer made a fundamental mistake here. The repository should only be responsible for the storage type (disk or tape), where the data is stored (local, NAS, or cloud), and how the data is stored (compression and encryption). The backup job should determine how the data is structured: the "Forever incremental" and "Incremental with full" options should be in the job schedule section, and the retention policy determines how long the data is kept. Whether the data is "Forever incremental" or "Incremental with full" should be decided by the backup job itself, not by the repository.

@Leslie Lei Thank you for your contribution to the NAKIVO Forum. Your post has been noted and forwarded to our Product Development team for thorough consideration.

Requests like this play a significant role in shaping the future of our product, and we will explore the feasibility of implementing it.

-

On 11/8/2023 at 5:39 PM, Babis said:

2023-11-08_16h06_42.png

>>> I had to log in to the Nakivo UI to update the jobs by disabling 1. the user's OneDrive backup in (Job 1) Microsoft Office 365 - Mailbox Backup Job and 2. the user's Mailbox backup in (Job 2) Microsoft Office 365 - OneDrive Backup Job.

@Babis Regarding your screenshot, we see that in the Source tab of "Microsoft Office 365 - Mailbox Backup Job", the root-level "ArcwareMS365" account is selected. Thus, the job will include all of the items inside this account.

If you only want to back up mailboxes, please deselect the "ArcwareMS365" account and select only the "Mailboxes" container.

We are looking forward to hearing from you.

-

22 hours ago, Babis said:

It would be nice to be able to create a job with limited sources.

Please check the image to see what I mean. (2023-11-08_16h06_42.png)

I've split the Microsoft Office 365 backup into two jobs (1. Microsoft Office 365 - Mailbox Backup Job and 2. Microsoft Office 365 - OneDrive Backup Job). When I create a new Microsoft account, both jobs add the new account to their account lists for both objects (Mailbox and OneDrive). This bug prevents the backup jobs from running normally.

I had to log in to the Nakivo UI to update the jobs by disabling 1. the user's OneDrive backup in (Job 1) Microsoft Office 365 - Mailbox Backup Job and 2. the user's Mailbox backup in (Job 2) Microsoft Office 365 - OneDrive Backup Job.

@Babis, the best way would be to generate and send a support bundle (https://helpcenter.nakivo.com/display/NH/Support+Bundles) to support@nakivo.com so our Technical Support team can investigate it and forward your feature request to our Product Development team for possible future implementation.

-

28 minutes ago, Elia said:

Thank you for your answer.

I'll try with the instruction reported in Section 2.

Elia

@Elia Please provide the community with an update regarding the resolution of your issue. Thank you.

-

18 hours ago, Elia said:

Good morning,

I'm sure that I've made an error but I hope that someone can help me in this situation.

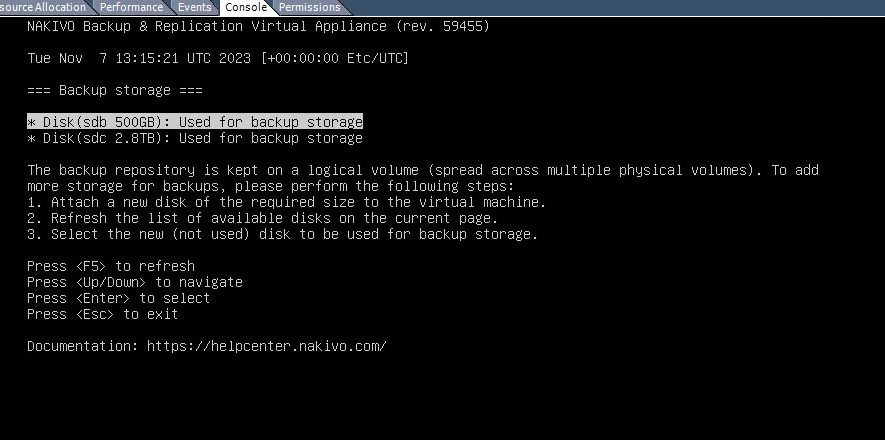

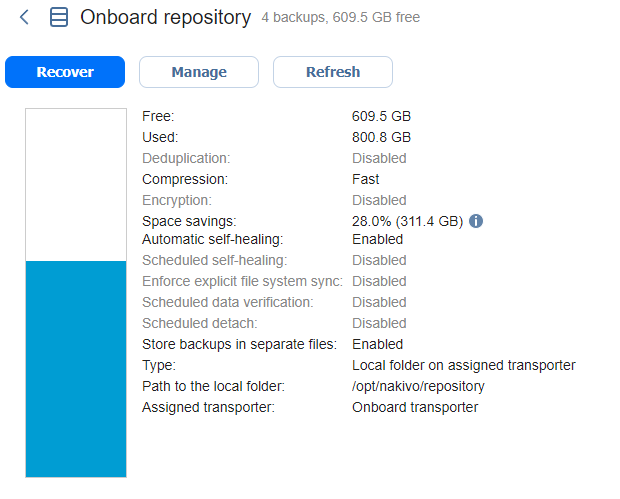

Scenario: Nakivo Backup & Replication Virtual Appliance - Current version: 10.7.2.69768 - 2 disks for backup storage (500 GB default + 1000 GB added some time ago)

I know the procedure to add more storage for backups (1. attach a new disk..., 2. refresh the list of available disks, 3. select the new disk), but I've made a mistake.

I increased the size of the 1000 GB disk in the vSphere Client (now 2,800 GB) and restarted the application. When I go to "Onboard repository storage", I see the disk size as 2.8 TB, but the Onboard Repository in the web interface is not updated.

Is there a way to solve this situation?

Thanks

Elia

@Elia Please, check the article below, Section 2: https://helpcenter.nakivo.com/Knowledge-Base/Content/Frequently-Asked-Questions/Backup-Repository/Extending-Backup-Repository-on-Virtual-Appliance.htm?Highlight=increasing

We are looking forward to hearing from you.

-

7 hours ago, Elia said:

Good morning,

I'm sure that I've made an error but I hope that someone can help me in this situation.

Scenario: Nakivo Backup & Replication Virtual Appliance - Current version: 10.7.2.69768 - 2 disks for backup storage (500 GB default + 1000 GB added some time ago)

I know the procedure to add more storage for backups (1. attach a new disk..., 2. refresh the list of available disks, 3. select the new disk), but I've made a mistake.

I increased the size of the 1000 GB disk in the vSphere Client (now 2,800 GB) and restarted the application. When I go to "Onboard repository storage", I see the disk size as 2.8 TB, but the Onboard Repository in the web interface is not updated.

Is there a way to solve this situation?

Thanks

Elia

@Elia, your information has been received and forwarded to our 2nd Level Support Team. We will follow up with you shortly. Thank you for using NAKIVO Backup & Replication as your backup solution.

-

NAKIVO Backup & Replication v10.10.1 is now available for download! This release delivers full support for the new VMware vSphere 8 Update 2, which introduced improvements to lifecycle management, GPU-based workloads, vCenter patching, DevOps and more.

Download v10.10.1 now to ensure uninterrupted data protection and disaster preparedness for your vSphere 8.0 U2 workloads.

Update now: https://www.nakivo.com/resources/download/update/

-

On 10/29/2023 at 3:46 PM, Leij said:

Hi,

My Nakivo jobs are working correctly, but I just realized that when I create a new job or edit an existing job, I can no longer manually force a "Primary transporter" on source and target hosts (the transporters are grayed out), and only the "Automatic" option can be selected. However, I need manual selection to make things work, because for some reason, in my setup, I need a transporter running on the same machine as the ESX host for the backup to complete without errors.

Can you let me know if there is a way to re-enable manual selection of the transporter?

I recommend performing the following steps:

- Edit the job, set the Transporters to "Automatic" on the "Options" tab, and save the job;

- Edit the job again, set the Transporters to "Manual", select the required Transporter on the "Options" tab, and save the job.

We are looking forward to hearing from you.

-

On 10/29/2023 at 3:46 PM, Leij said:

Hi,

My Nakivo jobs are working correctly, but I just realized that when I create a new job or edit an existing job, I can no longer manually force a "Primary transporter" on source and target hosts (the transporters are grayed out), and only the "Automatic" option can be selected. However, I need manual selection to make things work, because for some reason, in my setup, I need a transporter running on the same machine as the ESX host for the backup to complete without errors.

Can you let me know if there is a way to re-enable manual selection of the transporter?

@Leij, your information has been received and forwarded to our 2nd Level Support Team. We will follow up with you shortly.

Thank you for using NAKIVO Backup & Replication as your backup solution.

-

On 10/25/2023 at 5:36 PM, slabrie said:

When you deploy a new instance of Nakivo or create a new tenant in multi-tenant mode, the item creation order is inventory, nodes, then repository. That does not make sense if the only inventory I need to add is accessed through "Direct Connect", because I need to add the nodes first. Please change the creation order during the first start to nodes, repository, then inventory, or allow us to skip the inventory step.

@slabrie The best way would be to generate and send a support bundle (https://helpcenter.nakivo.com/display/NH/Support+Bundles) to support@nakivo.com so our Technical Support team can investigate it and forward your feature request to our Product Development team for possible future implementation.

-

15 hours ago, cmangiarelli said:

I figured out that if you detach the repo prior to deleting the backup copy job (in step #5 above), it will retain the original retention dates. I plan to use this same process when I move my production data over in a few weeks. If the retention dates get messed up though, I'll just use the "Delete backups in bulk" functionality once a week to clean my repo storage. The new arrays have a much larger storage capacity, so leaving a few extraneous recovery points around for a few weeks shouldn't cause any issues.

>>I ran a test, and it worked fine, except that all the old recovery points copied by the backup copy job in step #4 had their retention set to "Keep Forever." This happened when I deleted the copy job but told it to keep the backups. Is this the intended behavior?

It all depends on the NAKIVO retention policy used to create the recovery points in the repositories. If a new retention policy is used, the recovery points on both the source and target repositories will expire.

>>With 200 VMs, each having 9-11 recovery points, manually resetting the retention on 2,200 recovery points to fix this is not feasible. I understand why it might have changed when the backup copy job was deleted, but the Director wouldn't allow me to re-point the backup job until those copied backups were no longer referenced by another job object. I can use the "delete backups in bulk" to manually enforce my original retention policy, but that will take three months to complete the cycle.

>>I discovered that detaching the repository before deleting the backup copy job (in step #5 above) will retain the original retention dates. I plan to use this process when moving my production data over in a few weeks. If the retention dates get messed up, I'll use the "Delete backups in bulk" functionality once a week to clean my repository storage. The new arrays have a much larger storage capacity, so leaving a few extraneous recovery points around for a few weeks shouldn't cause any issues.

In this case, the best option is to use the "Delete backups in bulk" functionality. Thank you so much for your attention and participation in our community. We are looking forward to hearing from you.

-

37 minutes ago, cmangiarelli said:

I figured out that if you detach the repo prior to deleting the backup copy job (in step #5 above), it will retain the original retention dates. I plan to use this same process when I move my production data over in a few weeks. If the retention dates get messed up though, I'll just use the "Delete backups in bulk" functionality once a week to clean my repo storage. The new arrays have a much larger storage capacity, so leaving a few extraneous recovery points around for a few weeks shouldn't cause any issues.

@cmangiarelli, your information has been received and forwarded to our 2nd Level Support Team. We will follow up with you shortly. Thank you for using NAKIVO Backup & Replication as your backup solution.


-

Local Backup (in Inventory)

@Daniel Baessler, for now, it is not possible to back up the physical machine on which NAKIVO Backup & Replication is installed because of a conflict between the already installed Transporter and the physical agent needed for backing up the machine.

The good news is that our development team is actively working on a Universal Transporter feature to resolve scenarios like the one you're facing. Our quality assurance engineers are currently testing the Universal Transporter functionality.

Once testing completes and the feature meets our release criteria, it will be included in an upcoming version update. I don't have an exact release date to share yet, but please keep an eye out for this capability.

In the meantime, feel free to reach out to support if any other questions come up: support@nakivo.com.

Thank you for being a NAKIVO customer and participating in our community!