Leaderboard

Popular Content

Showing content with the highest reputation since 08/19/19 in Posts

-

Yes, native support for Proxmox VMs is under investigation. However, you can already use agent-based backup for Proxmox. This should allow you to back up and recover Proxmox data the same way you back up your Windows or Linux machines. We’ll share the workflow here soon. Stay tuned!

6 points

-

Darn, I was hoping to test it out already. The native backups in PVE are okay but lack some key features.

5 points

-

Does anyone know if NAKIVO is planning to support Proxmox backup? I'm looking for an alternative to the built-in Proxmox Backup Server.

4 points

-

Dear @Argon, thank you for your honest feedback. It helps us understand what our users need and how to improve our product moving forward. We have tried to be as clear as possible that this is only an agent-based approach to backing up Proxmox VE, and we wanted to inform users who may not be aware of this option in NAKIVO Backup & Replication. As for the native Backup Server tool, it may have some advantages, but it lacks several important capabilities, such as multiple backup targets (for example, cloud) and advanced recovery options (for example, granular recovery of application objects). We are continuously working on improving our software, and we are investigating native Proxmox support. Thank you once again for your input.
Best regards

4 points

-

We’re excited to announce that NAKIVO has expanded the range of supported platforms to include Proxmox VE:

4 points

-

I asked the NAKIVO sales team about Proxmox support recently. They said native support is on their roadmap, but there is no ETA yet.

4 points

-

Hello, community! Let's gather general and more specific tips here on how to speed up backups. Any suggestions and comments will be helpful. Thank you.

3 points

-

I've just uploaded almost 20 GB of very important data to OneDrive, mostly documents, XLSX files, and PDFs. Now I'm wondering about OneDrive's security and reliability. What is your point of view? Should I keep another copy somewhere else?

3 points

-

Hello, @Loki Rodriguez, great idea! I can recommend reading these worthwhile blog posts and references:
How to Increase VM Backup Speed
Increasing VM Backup and Replication Speed with Network Acceleration
Network Acceleration
Best Practices for Efficient Hyper-V VM Backup
Advanced Bandwidth Throttling
VMware Backup: Top 10 Best Practices
Hyper-V Best Practices for Administration
An Overview of Microsoft Office 365 Backup Best Practices

3 points

-

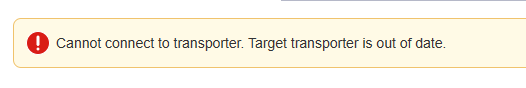

Hi, I have a problem that appears to be a version incompatibility. I am running the most recent version of NAKIVO, 10.11, and I have two NAS devices, one with Transporter version 10.8 and another with version 10.5. When I try to add them as nodes, I get the error shown in the screenshot. My solution would be to downgrade to 10.8 or similar, but I can't find older releases on the official website. Could you help me get previous versions of NAKIVO? If you have any other solutions or suggestions, I'm open to trying them.

2 points

-

Hello @Ana Jaas, thanks for reaching out. To tell you which steps to take, we need more information about your NAS devices. Could you please send us their specifications, such as the model, operating system version, and CPU? This information will allow us to guide you through updating the Transporter to match the version of the Director. Alternatively, you can go to Expert Mode: https://helpcenter.nakivo.com/User-Guide/Content/Settings/Expert-Mode.htm?Highlight=expert mode and enable system.transporter.allow.old to work around the compatibility issue. If you find these steps challenging or would prefer direct assistance, we're happy to schedule a remote session to help you through the process; just contact support@nakivo.com. Looking forward to your response.

2 points
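One detail worth keeping in mind when comparing versions like 10.11, 10.8, and 10.5 is that they have to be compared numerically, not as text. The sketch below shows that, plus a hypothetical compatibility rule: it assumes a Transporter is accepted only when its major.minor matches the Director's, and that an "allow old" switch (modeled loosely on system.transporter.allow.old) relaxes this for older Transporters. `parse_version` and `transporter_is_compatible` are illustrative names, not NAKIVO code, and the real rule may differ.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Convert a dotted version string such as "10.11" into a tuple of ints, e.g. (10, 11)."""
    return tuple(int(part) for part in version.strip().split("."))


def transporter_is_compatible(director: str, transporter: str, allow_old: bool = False) -> bool:
    """Hypothetical compatibility rule, for illustration only.

    Assumption: a Transporter is accepted when its major.minor version matches the
    Director's; an older Transporter is accepted only when an "allow old" switch
    (modeled loosely on system.transporter.allow.old) is turned on.
    """
    director_mm = parse_version(director)[:2]
    transporter_mm = parse_version(transporter)[:2]
    if transporter_mm == director_mm:
        return True
    return allow_old and transporter_mm < director_mm


# Plain string comparison gets dotted versions wrong: "10.11" < "10.8" is True,
# because the strings are compared character by character ("1" < "8").
print("10.11" < "10.8")                                # True (misleading)
print(parse_version("10.11") > parse_version("10.8"))  # True (correct)
for version in ("10.8", "10.5"):
    print(version,
          transporter_is_compatible("10.11", version),
          transporter_is_compatible("10.11", version, allow_old=True))
```

Comparing tuples of integers avoids the "10.11 looks older than 10.8" trap that plain string comparison falls into.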

-

I think the developers made a fundamental mistake here. The repository should be responsible only for the storage type (disk or tape), where the data is stored (local, NAS, or cloud), and how the data is stored (compression and encryption). The backup job should determine how the data is structured: the "Forever incremental" and "Incremental with full" options belong in the job schedule section, and the retention policy determines how long the data is kept. Choosing between "Forever incremental" and "Incremental with full" should be a function of the backup job itself, not of the repository.

2 points
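The separation proposed above can be sketched as a small data model. This is a hypothetical illustration of the poster's suggestion, not how NAKIVO is actually built: `Repository` only knows where and how bytes are stored, while `BackupJob` owns the chain structure and retention. All class and field names are assumptions.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class StorageType(Enum):
    DISK = "disk"
    TAPE = "tape"


class Location(Enum):
    LOCAL = "local"
    NAS = "nas"
    CLOUD = "cloud"


class BackupMode(Enum):
    FOREVER_INCREMENTAL = "forever incremental"
    INCREMENTAL_WITH_FULL = "incremental with full"


@dataclass(frozen=True)
class Repository:
    """Only where and how bytes are stored; nothing about backup-chain structure."""
    name: str
    storage_type: StorageType
    location: Location
    compression: bool
    encryption: bool


@dataclass
class BackupJob:
    """How the data is structured (chain mode, periodic fulls) and how long it is kept."""
    name: str
    repository: Repository
    mode: BackupMode
    retention_days: int
    full_every_days: Optional[int] = None  # only used with INCREMENTAL_WITH_FULL

    def __post_init__(self) -> None:
        if self.mode is BackupMode.INCREMENTAL_WITH_FULL and not self.full_every_days:
            raise ValueError("incremental-with-full jobs need a full backup interval")
        if self.mode is BackupMode.FOREVER_INCREMENTAL and self.full_every_days is not None:
            raise ValueError("forever-incremental jobs do not schedule periodic fulls")


# One repository can then serve jobs with different chain structures.
nas_repo = Repository("nas-repo", StorageType.DISK, Location.NAS, compression=True, encryption=True)
jobs = [
    BackupJob("file-servers", nas_repo, BackupMode.FOREVER_INCREMENTAL, retention_days=30),
    BackupJob("sql-servers", nas_repo, BackupMode.INCREMENTAL_WITH_FULL,
              retention_days=90, full_every_days=7),
]
for job in jobs:
    print(job.name, job.mode.value, "->", job.repository.name)
```

With this split, switching a job between the two modes would not require destroying and recreating the repository.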

-

Hi NAKIVO, is it possible to add an MFA option for the web interface? I have some customers for whom MFA is required for backup.
Thanks, Mario

2 points

-

Hi, the tape volume doesn't move back to its original slot when it is removed from the drive.

2 points

-

I completely agree with TonioRoffo and Jim. Deduplication efficiency and forever-incremental backups have been, from the beginning, among the main reasons I chose NAKIVO as the number one backup solution for my customers. Having to perform periodic full backups was, for some of my customers, extremely inconvenient because of the time and space it consumes. Now I have to destroy the repositories, along with all my backups, and recreate them with the correct settings in order to use "incremental forever", as I always did with previous NAKIVO versions. I think the change was indeed a bad idea.

2 points

-

Could you please make a video about using NAKIVO B&R with Microsoft Azure?

2 points

-

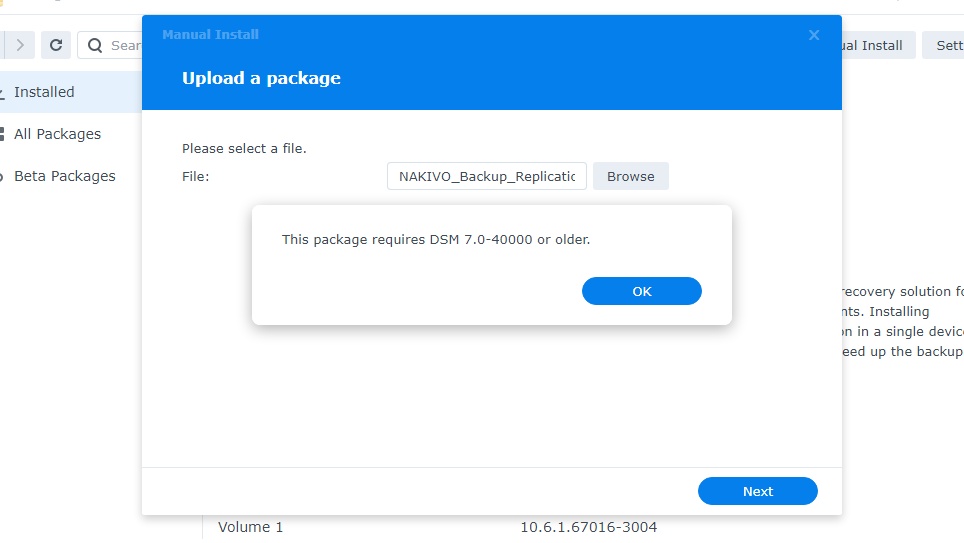

Could I suggest that you include these instructions for manual download and installation on DSM 7, for the current and future versions, on the main Updates page (with suitable caveats/warnings)? The Synology approval process is clearly far too slow (it can take months for an update to appear, and even then it tends to lag behind the current version), so I suspect most of us using NAKIVO on Synology are now updating manually (and how to do so is becoming a FAQ).
Matthew

2 points

-

2 points

-

2 points

-

@Official Moderator, nice tips. One more from me: use two-factor authentication. It is a must.

2 points

-

Hello, @Tayler Odling, of course, if your data is important to you, keep it in multiple places. I recommend having at least three copies of it, on two different types of media, with at least one copy offsite. As for Microsoft OneDrive, in my view it is as safe as any other storage, and it provides encryption, but you should not rely on a single location. You can find useful tips on OneDrive data safety and a list of common mistakes that can affect your cloud security here: https://www.nakivo.com/blog/microsoft-onedrive-security/
Let me know if you need any additional assistance. I look forward to hearing from you, Tayler.

2 points
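The "three copies, two media types, one offsite" recommendation above can be expressed as a simple check. This is a sketch under stated assumptions: the original data counts as one of the three copies, and "cloud" is treated as its own media type. `DataCopy` and `meets_3_2_1` are hypothetical names for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DataCopy:
    where: str     # free-form label, e.g. "laptop", "OneDrive"
    media: str     # assumed media categories, e.g. "disk", "tape", "cloud"
    offsite: bool  # stored away from the primary site?


def meets_3_2_1(copies: List[DataCopy]) -> bool:
    """At least 3 copies, on at least 2 types of media, with at least 1 copy offsite."""
    return (
        len(copies) >= 3
        and len({c.media for c in copies}) >= 2
        and any(c.offsite for c in copies)
    )


laptop = DataCopy("laptop", "disk", offsite=False)
onedrive = DataCopy("OneDrive", "cloud", offsite=True)
usb = DataCopy("USB drive", "disk", offsite=False)

print(meets_3_2_1([laptop, onedrive]))       # False: only two copies
print(meets_3_2_1([laptop, onedrive, usb]))  # True
```

In this model, the original files plus OneDrive alone fall short; adding one more local copy on separate media satisfies all three conditions.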

-

When I created this thread, I thought we would mostly be talking about network load and its critical impact on backups. (Update: yes, I have found this information in one of the references from @Official Moderator.) Reducing network load is the first thing I would do when trying to increase backup server performance.

2 points

-

Hello, @Hakim, I think you should find out more about NAKIVO Backup for Ransomware Protection: https://www.nakivo.com/ransomware-protection/
Anti-ransomware software alone is not sufficient to protect your organization's data. I also recommend reading the best practices for ransomware protection and recovery: https://www.nakivo.com/ransomware-protection/white-paper/best-practices-for-ransomware-protection-and-recovery/
Sorry for going off-topic; I just wanted to support your remark.

2 points

-

Hello, everyone. Two remarks from me: on one hand, antivirus solutions can slow down writing and reading data on disk; on the other hand, ransomware can also degrade performance. So you should always keep a balance.

2 points

-

Hello, @tommy.cash, thank you for your post. Here is a list of the points you should consider before creating your university's backup policy: 1) frequency of system/application backups; 2) frequency of network file backups; 3) frequency of email backups; 4) frequency of desktop backups; 5) storage; 6) recovery testing. As for Microsoft 365 backup policies, there are built-in configuration options in your account that can help prevent data loss. However, because of Microsoft's Shared Responsibility Model, you also need to back up your Microsoft 365 data with a third-party solution such as NAKIVO Backup & Replication. Please check this useful how-to guide on setting up Microsoft 365 backup policies: https://www.nakivo.com/blog/setting-up-microsoft-office-365-backup-policies/

2 points

-

@Bedders, please try replacing "q" with "-q":
zip -q -d log4j-core*.jar org/apache/logging/log4j/core/lookup/JndiLookup.class
Let me know if it works for you!

2 points

-

Hi, @JurajZ and @Bedders! NAKIVO Backup & Replication uses the Apache Log4j library, which is part of Apache Logging Services. You can manually fix the CVE-2021-44228 vulnerability by removing JndiLookup.class from libs\log4j-core-2.2.jar.

Note: If the libs folder contains log4j-core-fixed-2.2.jar instead of log4j-core-2.2.jar, the issue has already been fixed in your version of NAKIVO Backup & Replication.

For Linux: Go to the libs folder inside the NAKIVO Backup & Replication installation folder. To remove JndiLookup.class from the jar file, run the following command:
zip -q -d log4j-core*.jar org/apache/logging/log4j/core/lookup/JndiLookup.class

For Windows: Make sure the 7z tool is installed. Go to the libs folder inside the NAKIVO Backup & Replication installation folder. Use 7z to open log4j-core-2.2.jar and remove JndiLookup.class from the jar file.

Then restart NAKIVO Backup & Replication.

For NAS devices: If you are using a NAS, open an SSH connection to your device and locate the NAKIVO Backup & Replication installation folder:
For ASUSTOR NAS: /usr/local/AppCentral/NBR
For FreeNAS/TrueNAS (inside the jail): /usr/local/nakivo/director
For NETGEAR NAS: /apps/nbr
For QNAP NAS: /share/CACHEDEV1_DATA/.qpkg/NBR
For Raspberry Pi: /opt/nakivo/director
For Synology NAS: /volume1/@appstore/NBR
For Western Digital NAS: /mnt/HD/HD_a2/Nas_Prog/NBR
Note: Refer to your NAS vendor's documentation to learn how to open an SSH connection to your NAS device.

IMPORTANT: CVE-2021-44228 is a severe vulnerability. We strongly advise you to apply the manual fix as soon as possible. This is the best way to avoid the risk of a security breach. Please contact customer support if you require a custom build of NAKIVO Backup & Replication that includes the fix.

2 points
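Where neither `zip` nor 7z is available, the same removal can in principle be done with Python's standard `zipfile` module. The sketch below assumes Python 3 is installed on the host and that the jars sit in a `libs` folder directly under the installation folders listed in the post; `find_log4j_jars` and `remove_jar_entry` are hypothetical helper names. Because `zipfile` cannot delete entries in place, the helper copies every other entry into a temporary jar and swaps it in. It is not an official NAKIVO tool, so stop the service and back up the jar first.

```python
import glob
import os
import tempfile
import zipfile
from typing import Dict, List

JNDI_ENTRY = "org/apache/logging/log4j/core/lookup/JndiLookup.class"

# Installation folders listed in the post, keyed by NAS type.
NAS_INSTALL_DIRS: Dict[str, str] = {
    "ASUSTOR": "/usr/local/AppCentral/NBR",
    "FreeNAS/TrueNAS (jail)": "/usr/local/nakivo/director",
    "NETGEAR": "/apps/nbr",
    "QNAP": "/share/CACHEDEV1_DATA/.qpkg/NBR",
    "Raspberry Pi": "/opt/nakivo/director",
    "Synology": "/volume1/@appstore/NBR",
    "Western Digital": "/mnt/HD/HD_a2/Nas_Prog/NBR",
}


def find_log4j_jars(install_dir: str) -> List[str]:
    """List log4j-core*.jar files in <install_dir>/libs (assumed layout)."""
    return sorted(glob.glob(os.path.join(install_dir, "libs", "log4j-core*.jar")))


def remove_jar_entry(jar_path: str, entry: str = JNDI_ENTRY) -> bool:
    """Rewrite jar_path without `entry`, like `zip -q -d`. Returns True if it was removed."""
    with zipfile.ZipFile(jar_path) as src:
        if entry not in src.namelist():
            return False  # already clean, e.g. a log4j-core-fixed-*.jar
        # Write the new jar next to the old one so the final rename stays on one filesystem.
        fd, tmp_path = tempfile.mkstemp(suffix=".jar", dir=os.path.dirname(os.path.abspath(jar_path)))
        os.close(fd)
        try:
            with zipfile.ZipFile(tmp_path, "w") as dst:
                for info in src.infolist():
                    if info.filename != entry:
                        # Reusing the ZipInfo keeps each entry's name, timestamp, and compression.
                        dst.writestr(info, src.read(info))
        except BaseException:
            os.remove(tmp_path)
            raise
    os.replace(tmp_path, jar_path)  # swap in the cleaned jar
    return True


if __name__ == "__main__":
    # Example for a Synology NAS; prints nothing if the folder does not exist.
    for jar in find_log4j_jars(NAS_INSTALL_DIRS["Synology"]):
        print(jar, "patched" if remove_jar_entry(jar) else "already clean")
```

As with the manual fix, restart NAKIVO Backup & Replication afterwards.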

-

Hi, @SALEEL! I am very sorry that we have put you in this position. The latest changes in DSM7 were unexpected for everyone, and it makes us very sad that we can't provide you with our usual level of service. Our team did everything it could to accelerate the process; however, at this point, there is nothing more we can do. I understand your disappointment, and I apologize for all the inconvenience this has caused you. Customer satisfaction is one of our main values, which is why this situation is so frustrating. If only it depended on us! At the moment, I can offer you the following options:
1. Temporarily install NAKIVO Backup & Replication on another host and use the NAS-based repository as a CIFS/NFS share. When DSM7 is officially supported, it will be possible to install NAKIVO on the NAS again.
2. Workaround for DSM 7:
a) Deploy a new NAKIVO installation elsewhere as a Virtual Appliance (VA), or install it on a supported VM, physical machine, or NAS;
b) Share the repository folder on the Synology NAS with DSM7 as an NFS or CIFS (SMB) share;
c) Add this repository as an existing repository to the new NAKIVO installation;
d) Restore the configuration of the old NAKIVO installation from the Self-backup in the original repository.
We are working closely with Synology to prepare the release, and we are almost at the final stage. However, we expect to need a few more weeks until the final release. Again, I am very sorry for all the inconvenience!

2 points

-

2 points

-

Great to get 2FA for the login. I just found the option "Store backups in separate files": "Select this option to enable this backup repository to store data of every machine in separate backup files. Enabling this option is highly recommended to ensure higher reliability and performance." Why is this more reliable? Does it affect global deduplication? And can I convert my current backups to this option? In my view, repositories are currently NAKIVO's bottleneck, so any improvement here is very welcome!

2 points

-

2 points

-

Here is how to fix it on QNAP:
1. Log in to the QNAP via SSH as admin and run:
cd /share/CACHEDEV1_DATA/.qpkg/NBR/transporter
./nkv-bhsvc stop
(prints: Stopping NAKIVO Backup & Replication Transporter service:)
./bhsvc -b "<UsePassword>"
./nkv-bhsvc start
(prints: Starting NAKIVO Backup & Replication Transporter service:)
2. In the Chrome browser, open https://<qnapip>:4443
3. Go to Settings / Transporters / Onboard transporter / Edit.
4. Press F12, go to the Console, and enter the following JavaScript code (paste one command at a time and press Enter):
var a=Ext.ComponentQuery.query("transporterEditView")[0]
a.masterPasswordContainer.show()
a.connectBtn.show()
5. Two new fields appear in the browser: a Master password field and a Connect button. In Master password, enter the same password you used in the bhsvc -b "<UsePassword>" command.
6. Press the Connect button.
7. Then refresh the Transporter and the Repository.
by Boris

2 points
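One pitfall in the SSH step above is the password itself: inside double quotes, characters such as `$` or `!` can still be interpreted by the shell. The sketch below, with the hypothetical helper name `master_password_commands`, only builds (and prints) the command sequence from the post, with the password quoted via Python's `shlex.quote`; it does not run anything. The QNAP path comes from the post; other NAS models use different folders.

```python
import shlex
from typing import List

# Path taken from the post; other NAS models use different install folders.
QNAP_TRANSPORTER_DIR = "/share/CACHEDEV1_DATA/.qpkg/NBR/transporter"

# Browser-console snippet from the post, kept verbatim for reference.
CONSOLE_SNIPPET = [
    'var a=Ext.ComponentQuery.query("transporterEditView")[0]',
    "a.masterPasswordContainer.show()",
    "a.connectBtn.show()",
]


def master_password_commands(password: str, transporter_dir: str = QNAP_TRANSPORTER_DIR) -> List[str]:
    """Build the SSH command sequence from the post with the password shell-quoted.

    shlex.quote wraps the password in single quotes, so characters such as $, !, or
    spaces are passed to bhsvc literally instead of being expanded by the shell.
    """
    return [
        "cd " + shlex.quote(transporter_dir),
        "./nkv-bhsvc stop",
        "./bhsvc -b " + shlex.quote(password),
        "./nkv-bhsvc start",
    ]


if __name__ == "__main__":
    for line in master_password_commands("S3cret$pass!"):
        print(line)
    print()
    for line in CONSOLE_SNIPPET:
        print(line)
```

Single quotes are used because a POSIX shell performs no expansion at all inside them, unlike double quotes.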

-

Is anyone else having problems with the Grandfather-Father-Son (GFS) retention scheme not working as expected? Daily backups work correctly and their retention is correct, but instead of getting weekly and monthly backups, all my full backups are marked as yearly, week after week, at both of my sites with tape libraries. (They expire 11 years from now.) I opened a ticket, but support could not tell me anything and claimed that everything was working fine. At the time I was not doing daily backups, so I turned them on. They work, but they didn't affect my problem with yearly backups, so for now I'm turning them off to see what happens with just weekly and monthly backups.
These are my settings:
Retain one recovery point per day for 7 days
Retain one recovery point per week for 4 weeks
Retain one recovery point per month for 12 months
Retain one recovery point per year for 11 years
Tape job options:
Create full backup: Every Saturday
Tape appending: Start full backup with an empty tape**
**I found that, now that daily backups are turned on, NAKIVO starts writing daily backups to my full backup tape before I get a chance to eject it. This is not desirable, but there are no options to segregate GFS tapes. Setting this to "Always start with an empty tape" would burn a tape each day, which is also not desirable, but I may have no choice. I would like to append all daily backups to the same tape, keep it in the library, and take only my weekend full backups offsite.

2 points
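For reference, here is how the settings above would typically be expected to behave: each tier keeps the newest recovery point in each of its most recent periods, so a year of Saturday fulls should yield roughly one yearly point per calendar year, not one per week. This is a generic GFS sketch, not NAKIVO's actual retention code; `keep_newest_per_period` and `gfs_keep` are hypothetical names, and weeks are assumed to be ISO weeks.

```python
from datetime import date, timedelta
from typing import Callable, Dict, Hashable, Iterable, Set


def keep_newest_per_period(points: Iterable[date], period: Callable[[date], Hashable], count: int) -> Set[date]:
    """Keep the newest recovery point in each of the `count` most recent periods."""
    newest: Dict[Hashable, date] = {}
    for p in sorted(points):
        newest[period(p)] = p  # ascending order, so the newest point per period wins
    recent_periods = sorted(newest, key=newest.get, reverse=True)[:count]
    return {newest[k] for k in recent_periods}


def gfs_keep(points: Iterable[date], days: int = 7, weeks: int = 4,
             months: int = 12, years: int = 11) -> Set[date]:
    """Union of the daily, weekly, monthly, and yearly tiers (defaults match the post)."""
    pts = list(points)
    return (
        keep_newest_per_period(pts, lambda d: d, days)
        | keep_newest_per_period(pts, lambda d: tuple(d.isocalendar())[:2], weeks)  # (ISO year, week)
        | keep_newest_per_period(pts, lambda d: (d.year, d.month), months)
        | keep_newest_per_period(pts, lambda d: d.year, years)
    )


# 60 Saturday full backups starting 2023-01-07 (a Saturday).
saturdays = [date(2023, 1, 7) + timedelta(weeks=i) for i in range(60)]
yearly = keep_newest_per_period(saturdays, lambda d: d.year, 11)
print(sorted(yearly))            # [datetime.date(2023, 12, 30), datetime.date(2024, 2, 24)]
print(len(gfs_keep(saturdays)))  # 17
```

Of the 17 points kept in total, only two fall in the yearly tier, which is the contrast with every weekly full being marked as yearly.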

-

In a multi-tenant environment, if the VPN drops for any reason (ISP problem, customer's AC problem, etc.), will scheduled jobs still start on time, or will they wait for a command from the Director to start? Thanks and best regards

2 points

-

The simplest way to integrate this without going vendor-specific is to add SAML 2.0. This would allow logins via Microsoft 365 or other MFA providers.

2 points

-

2 points

-

It is something I may be interested in, but I would like to see a "how to" video on the process on your YouTube channel. I saw that you have a "how to" video on Amazon EC2 backups (which is old now, by the way).

2 points

-

Thank you. Someone from NAKIVO Support has already helped me. This is what I did to increase the Java memory for the NAKIVO application:
1. Stop the NAKIVO service from the AppCenter.
2. Connect to the Synology via SSH as "root".
3. Edit the nkv-dirsvc file (usually located at /volume1/@appstore/NBR/nkv-dirsvc) and replace:
SVC_OPTIONS="-Dfile.encoding=UTF-8 -server -XX:+AggressiveOpts -XX:-OmitStackTraceInFastThrow -Xnoagent -Xss320k -Xms128m -Xmx320m"
with:
SVC_OPTIONS="-Dfile.encoding=UTF-8 -server -XX:+AggressiveOpts -XX:-OmitStackTraceInFastThrow -Xnoagent -Xss320k -Xms128m -Xmx640m"
4. Start the NAKIVO service.
Thank you.
Juraj

2 points
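Step 3 above changes a single JVM flag, the maximum heap size `-Xmx`, from 320m to 640m. A minimal sketch of that edit, assuming Python 3 is available on the NAS and the file contains exactly one `SVC_OPTIONS=` line, might look like this; `set_max_heap` and `update_service_file` are hypothetical names. It keeps a `.bak` copy, and, as in the post, the NAKIVO service should be stopped before the edit and started afterwards.

```python
import re
import shutil

XMX_FLAG = re.compile(r"-Xmx\d+[kKmMgG]?")


def set_max_heap(svc_options_line: str, new_xmx: str) -> str:
    """Replace the single -Xmx<size> flag in an SVC_OPTIONS line, e.g. 320m -> 640m."""
    if not re.fullmatch(r"\d+[kKmMgG]?", new_xmx):
        raise ValueError("heap size must look like 640m or 1g")
    updated, count = XMX_FLAG.subn("-Xmx" + new_xmx, svc_options_line)
    if count != 1:
        raise ValueError("expected exactly one -Xmx flag, found %d" % count)
    return updated


def update_service_file(path: str, new_xmx: str) -> None:
    """Edit the SVC_OPTIONS= line of a service script in place, keeping a .bak copy."""
    with open(path, encoding="utf-8") as f:
        lines = f.readlines()
    targets = [i for i, line in enumerate(lines) if line.lstrip().startswith("SVC_OPTIONS=")]
    if len(targets) != 1:
        raise ValueError("expected exactly one SVC_OPTIONS= line")
    lines[targets[0]] = set_max_heap(lines[targets[0]], new_xmx)
    shutil.copy2(path, path + ".bak")  # keep the original for rollback
    with open(path, "w", encoding="utf-8") as f:
        f.writelines(lines)
```

For example, `update_service_file("/volume1/@appstore/NBR/nkv-dirsvc", "640m")` would perform the same change as the post; the regex touches only `-Xmx`, so `-Xss320k` and `-Xms128m` stay as they are.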

-

2 points

-

NAKIVO Backup & Replication v9.0 allows you to perform incremental, application-aware backups of Windows Server. With our solution, you can instantly recover files as well as application objects! Download our full-featured free trial to try out NAKIVO Backup & Replication for yourself: https://www.nakivo.com/resources/releases/v9.0/

2 points

-

NIST 800-171 and CMMC require that we encrypt our tapes to protect data at rest. Is that feature on the roadmap?

1 point

-

1 point

-

I am running a Hyper-V VM backup to a USB HDD for testing, on a freshly formatted disk. After running one backup of three VMs (about 100 GB), Windows reports that the drive is 97% fragmented. Is this normal/expected? Should we defragment it often? The USB HDD repository is set to Best compression, with "store as separate files" disabled. Thanks!

1 point

-

@Loki Rodriguez, my main principle is to ensure complete monitoring of all systems: hardware monitoring, network monitoring, security monitoring, critical activity monitoring, application monitoring, and uptime monitoring. There are many good tools for keeping track of each of these.

1 point

-

Just confirming that changing q to -q worked, many thanks! It's probably worth mentioning that the email you sent out contained the same error as the forum post, in case you haven't already been inundated with support calls about it!

1 point

-

Thanks. This does raise some general concerns:
i) Will NAKIVO have to go through this approval process every time there is an update to the software? Unless Synology streamlines its approval process, at the current rate the Synology version would be about three months behind the released version. This may not be an issue for feature-release upgrades (others may have different views), but it could be problematic for bug-fix or security updates, particularly given that vCenter updates quite often break things and require an updated NAKIVO.
ii) When I've had to approach NAKIVO support, I've found them extremely responsive. Part of the troubleshooting process often involves installing pre-release, beta, or bespoke builds of the software. I can't see how this would work if Synology doesn't allow external installers.
I hope these issues can be resolved soon. To be honest, this is making me question my decision to go with Synology rather than Nakivo. When I need to update or replace my NAS, I'll certainly be looking at what other options there are.
Matthew

1 point

-

Dear Moderator, as stated above, it worked for me. I just moved the installer from root (/) to /home, and from there I could run it without problems. Thanks a lot.

1 point

-

1 point

-

My apologies, I forgot to reply here. An update for anyone reading this in the future: we upgraded NAKIVO B&R to v10.0.0, which resolved the issue. The VMs are now all on Server 2019 hosts, and boot verification is working as expected.

1 point

-

OK, I deployed the Transporters, but I see no change. NAKIVO is installed locally on storage1, which provides a datastore via NFS to the two ESXi hosts. I also have another storage2 that provides a datastore via NFS to the two ESXi hosts, but I'm not using it for this test. I have VMs on esxi1 with datastore1, and when I back them up, they run at very low speed and use the network. All NAKIVO has to do is copy the snapshots locally, since the datastore and NAKIVO are on the same server. What am I missing?

1 point
